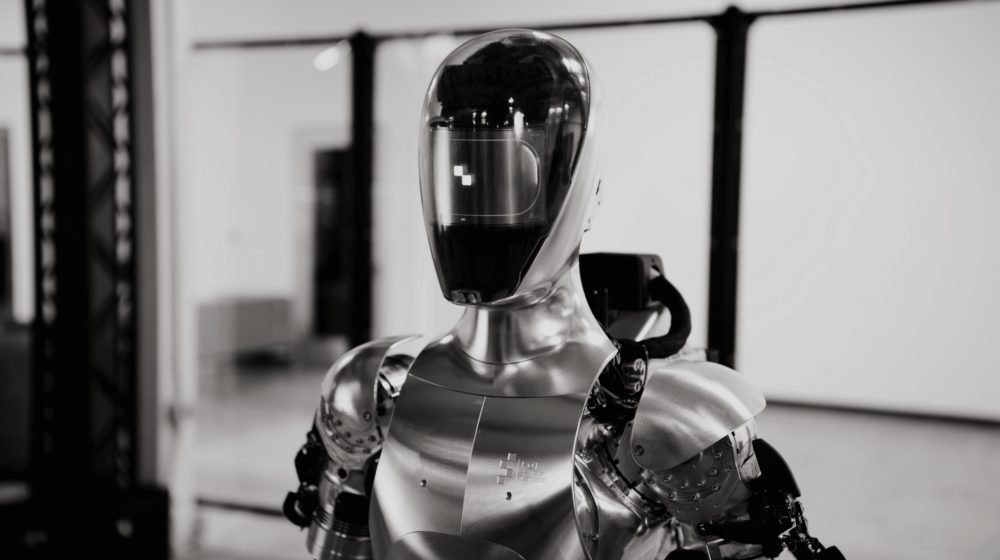

ChatGPT maker OpenAI and AI robotics startup Figure AI have come together to create a humanoid robot that can engage in comprehensive conversations, as well as plan and carry out its tasks. OpenAI and Microsoft have invested heavily in the robotics startup as well.

This advancement is made possible through the integration of the robot with a multimodal AI model developed by OpenAI, which is proficient in interpreting both images and text.

Thanks to this AI model, the robot, called Figure 01, can describe its environment in detail, understand everyday situations, and carry out requests that are nuanced and depend on the surrounding context.

Figure AI says that none of the actions shown in the demonstration video below are remote-controlled; all of them were learned by the robot. The video is also shown at normal speed and has not been sped up to make the robot’s actions look more impressive than they actually are.

Corey Lynch, robotics and AI engineer at Figure, shared his excitement for Figure 01, saying: “Even just a few years ago, I would have thought having a full conversation with a humanoid robot while it plans and carries out its own fully learned behaviors would be something we would have to wait decades to see. Obviously, a lot has changed.”

Google has previously showcased comparable advances in robotics with its RT models. These models enable a robot to navigate everyday environments and carry out complex tasks, guided by a combination of language and image models. However, Google’s prototype robots were less capable in conversation.

Lynch also detailed the robot’s capabilities, highlighting its ability to describe what it sees, plan its next moves, reflect on past experiences, and explain out loud the reasoning behind its actions.

This is because OpenAI’s multimodal AI is able to access and take into account the robot’s full conversation history, including images, allowing Figure 01 to act and respond with context. This same model also determines the appropriate learned behavior the robot should employ to fulfill a specific command.
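The idea of choosing a learned behavior from the whole conversation, rather than from the latest sentence alone, can be illustrated with a small Python sketch. Everything in it is an assumption made for illustration: the `Turn` and `Conversation` types, the behavior names, and especially `select_behavior`, which uses simple keyword matching as a stand-in for the multimodal model. It is not Figure's or OpenAI's actual interface.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Turn:
    speaker: str                   # "human" or "robot"
    text: str
    image: Optional[bytes] = None  # camera frame stored with this turn, if any


@dataclass
class Conversation:
    turns: List[Turn] = field(default_factory=list)

    def add(self, speaker: str, text: str, image: Optional[bytes] = None) -> None:
        self.turns.append(Turn(speaker, text, image))


# Hypothetical library of learned behaviors and the words that trigger them.
BEHAVIORS = {
    "hand_over_item": {"give", "hand", "pass"},
    "place_in_rack": {"place", "put", "rack"},
}


def select_behavior(conversation: Conversation) -> Optional[str]:
    """Stand-in for the multimodal model (assumption, not the real system).

    Walks the human turns from newest to oldest and returns the first
    behavior whose trigger words appear, so an earlier request is still
    found when the latest turn is only a confirmation.
    """
    for turn in reversed(conversation.turns):
        if turn.speaker != "human":
            continue
        words = set(re.findall(r"[a-z]+", turn.text.lower()))
        for name, triggers in BEHAVIORS.items():
            if words & triggers:
                return name
    return None


conv = Conversation()
conv.add("human", "Can you place the dishes in the rack?", image=b"frame-0")
conv.add("robot", "Sure, I can do that.")
conv.add("human", "Great, go ahead.")
chosen = select_behavior(conv)
```

Here the final human turn contains no instruction of its own, so the choice depends on the earlier request kept in the history. A real multimodal model would also use the stored images, which this stand-in ignores.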

The robot’s movements are controlled by neural network policies known as visuomotor transformers, which map visual inputs directly to physical actions. These policies take in images from the robot’s cameras at 10 Hz and generate actions with 24 degrees of freedom, such as wrist positions and finger angles, at 200 Hz.
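The two rates quoted above imply that 20 action updates are produced for every camera image (200 Hz divided by 10 Hz). The Python sketch below simulates that timing only. The function names, the placeholder frame strings, and the `dummy_policy` that returns zeros are all hypothetical, and the sketch says nothing about how Figure's real controller is implemented.

```python
IMAGE_HZ = 10    # camera frames per second, as quoted in the article
ACTION_HZ = 200  # action updates per second, as quoted in the article
DOF = 24         # degrees of freedom in each action


def steps_per_frame(image_hz: int = IMAGE_HZ, action_hz: int = ACTION_HZ) -> int:
    """Number of action updates emitted for each camera frame."""
    if action_hz % image_hz != 0:
        raise ValueError("action rate must be a multiple of the image rate")
    return action_hz // image_hz


def dummy_policy(frame: str, tick: int) -> list:
    """Hypothetical placeholder policy: returns a zero action of length DOF."""
    return [0.0] * DOF


def simulate(duration_s: float, policy=dummy_policy):
    """Step a two-rate loop and return (frames_used, actions)."""
    per_frame = steps_per_frame()
    total_ticks = int(duration_s * ACTION_HZ)
    frames_used = 0
    frame = None
    actions = []
    for tick in range(total_ticks):
        # A new image arrives only on every `per_frame`-th action tick.
        if tick % per_frame == 0:
            frame = f"frame-{frames_used}"  # stands in for a camera image
            frames_used += 1
        actions.append(policy(frame, tick))
    return frames_used, actions


frames_used, actions = simulate(1.0)
```

Over one simulated second this loop consumes 10 frames and emits 200 actions of 24 values each, which matches the figures in the article.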