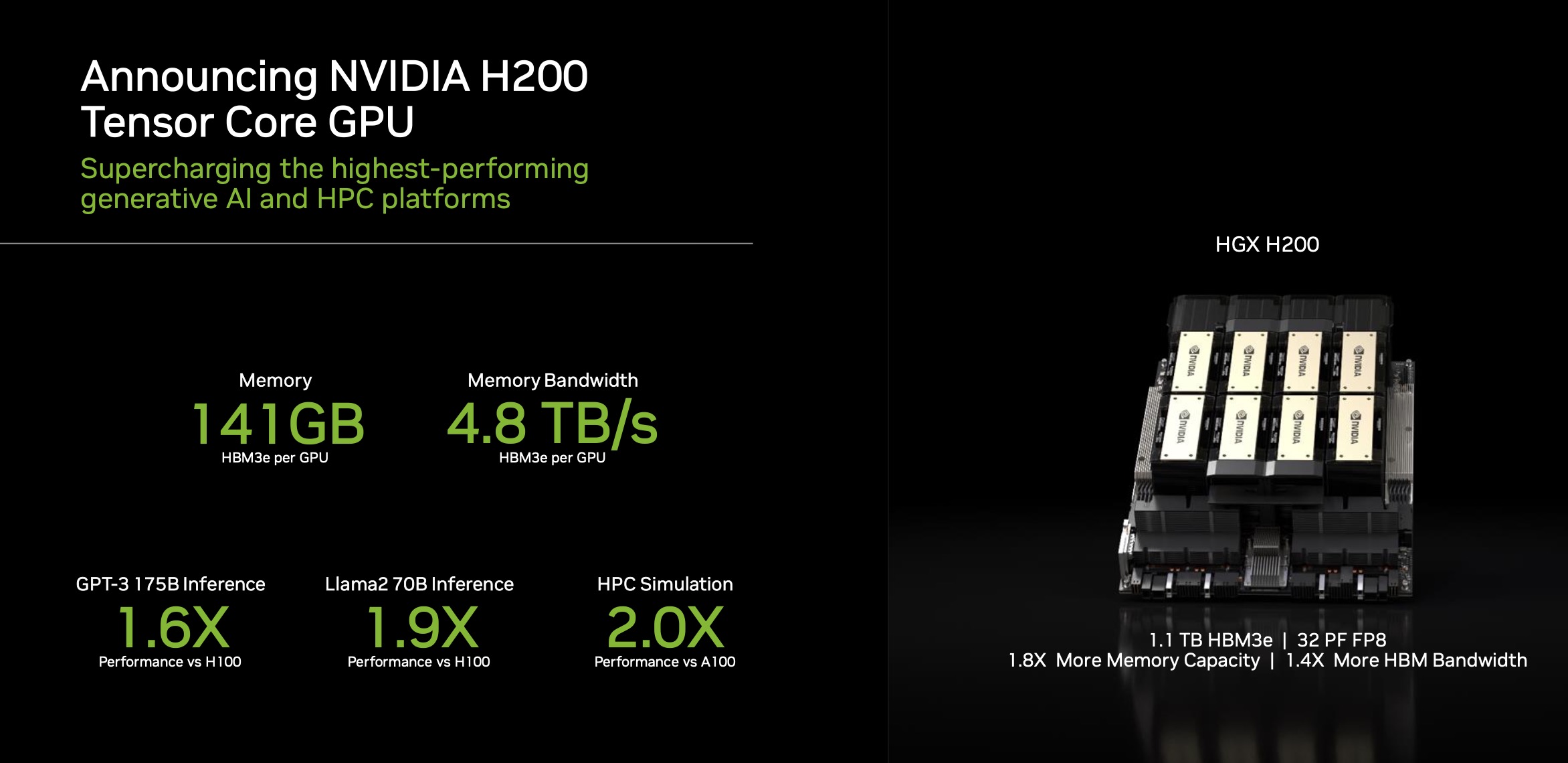

The global AI industry is in for a major performance boost in the near future: Nvidia has announced its newest powerhouse GPU platform for AI, the HGX H200. As the name suggests, it is a step above the highly sought-after H100, with 1.4x the memory bandwidth and 1.8x the memory capacity.

The HGX H200 platform is based on Nvidia’s Hopper architecture and features the Nvidia H200 Tensor Core GPU, which is designed to handle heavier generative AI workloads.

Specs and Performance

The H200 is the first GPU to offer HBM3e, a newer generation of high-bandwidth memory that is both faster and larger. It carries 141GB of memory delivering 4.8 terabytes per second, which is nearly double the capacity of an earlier-generation predecessor, the Nvidia A100, and 2.4 times its memory bandwidth.
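The quoted multipliers can be turned back into rough baseline figures. The following is a minimal sketch, assuming each "Nx" figure means "N times the older part's value". The helper name `implied_baseline` is hypothetical, and the results are back-of-the-envelope estimates derived from rounded multipliers, not official specifications.

```python
# Figures quoted in the article for the H200.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8


def implied_baseline(h200_value, multiplier):
    """Return the older part's value implied by 'H200 = multiplier x baseline'.

    Hypothetical helper for illustration; the multipliers are rounded,
    so the result is only an estimate.
    """
    return h200_value / multiplier


# Multipliers quoted against the H100 (1.8x capacity, 1.4x bandwidth).
h100_memory_gb = implied_baseline(H200_MEMORY_GB, 1.8)
h100_bandwidth_tbps = implied_baseline(H200_BANDWIDTH_TBPS, 1.4)

# Multiplier quoted against the A100 (2.4x bandwidth).
a100_bandwidth_tbps = implied_baseline(H200_BANDWIDTH_TBPS, 2.4)

print(f"Implied H100 memory:    ~{h100_memory_gb:.0f} GB")
print(f"Implied H100 bandwidth: ~{h100_bandwidth_tbps:.1f} TB/s")
print(f"Implied A100 bandwidth: ~{a100_bandwidth_tbps:.1f} TB/s")
```

Because the published multipliers are rounded to one decimal place, these implied values can differ slightly from the older parts' datasheet numbers.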

Ian Buck, VP of hyperscale and HPC at Nvidia, said: “To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory. With Nvidia H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

According to Nvidia, the H200 delivers significant performance gains, notably almost double the inference speed of the H100 on Llama 2, a 70-billion-parameter LLM. Future software updates are expected to improve these figures further.

The HGX H200 in an eight-way configuration provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory. Nvidia positions this for the most demanding generative AI and HPC applications.
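The aggregate figures follow from the per-GPU numbers. As a quick check, here is a minimal sketch that assumes the eight-way board holds eight H200 GPUs with 141GB each and treats "over 32 petaflops" as a lower bound. The variable names are illustrative only.

```python
# Per-GPU and per-board figures quoted in the article.
GPUS_PER_BOARD = 8           # eight-way HGX H200
H200_MEMORY_GB = 141         # HBM3e capacity per GPU
BOARD_FP8_PETAFLOPS = 32     # "over 32", so treated here as a lower bound

# Aggregate memory across the board, in GB and in decimal TB.
aggregate_memory_gb = GPUS_PER_BOARD * H200_MEMORY_GB
aggregate_memory_tb = aggregate_memory_gb / 1000

# Lower bound on FP8 compute per GPU implied by the board-level figure.
per_gpu_fp8_petaflops = BOARD_FP8_PETAFLOPS / GPUS_PER_BOARD

print(f"Aggregate memory: {aggregate_memory_gb} GB (~{aggregate_memory_tb:.1f} TB)")
print(f"FP8 compute per GPU: at least {per_gpu_fp8_petaflops:.0f} petaflops")
```

Eight GPUs at 141GB each come to 1,128GB, which rounds to the quoted 1.1TB.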

Compatibility

The HGX H200 is designed to be compatible with systems that are already running the H100, since it ships on server boards in four- and eight-way configurations that work with the hardware and software of HGX H100 systems. This means companies should be able to switch over to the H200 without redesigning their existing infrastructure.

Availability

The first companies to offer the new Nvidia H200 in their cloud systems include Amazon, Google, Microsoft, and Oracle. The hardware is expected to roll out during the second quarter of 2024.

As for the price, the H200 is expected to be as expensive as the H100. Nvidia hasn’t officially revealed any price tags, but according to reports from CNBC, the H100 has typically sold for between $25,000 and $40,000. Thousands of these GPUs are needed to operate at the highest levels, which is why Nvidia’s business has been booming lately.