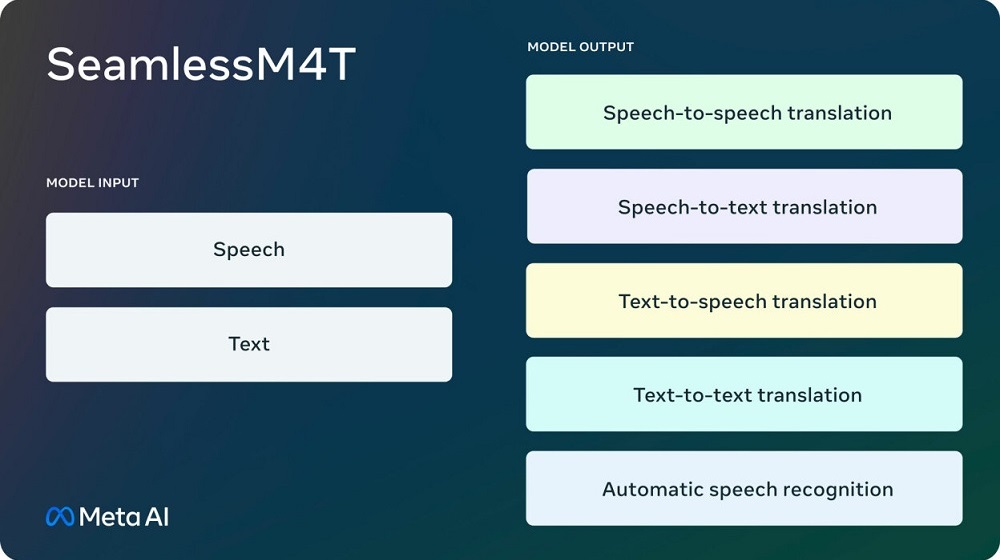

Last month, Facebook parent Meta released Llama 2, a free open-source AI model available for commercial use, and CM3leon, an image generation model. Now it is back with another major AI announcement. The social media company has released SeamlessM4T (Massively Multilingual and Multimodal Machine Translation), an all-in-one multimodal, multilingual translation and transcription model that it claims is the first of its kind.

Meta announced on Tuesday that the model offers text and speech translation for nearly 100 languages, including English, Chinese, French, Arabic, Hindi, and Urdu. The company has called the model a significant breakthrough in speech-to-speech and speech-to-text translation and transcription.

Speech recognition, speech-to-text translation, and text-to-text translation work for nearly 100 languages. Speech-to-speech translation supports nearly 100 input languages and 36 output languages, and text-to-speech translation supports nearly 100 input languages and 35 output languages. Meta has published the complete list of supported languages. According to Meta, handling all of these tasks in a single multimodal model reduces errors and delays and improves the efficiency and quality of translations.

As with Meta’s earlier model releases this year, SeamlessM4T is available under a research license, allowing researchers and developers to build on the work. Alongside the model, Meta has also released SeamlessAlign, its multimodal translation dataset.

The model can be tried through a research demo hosted on Meta’s website, which accepts input clips between 2 and 15 seconds long. We tried it, and it works well. In our tests, it took 30 to 60 seconds to generate text and speech translations, and it mostly got them right. The translations were not especially conversational, but they were still impressive, particularly for the model’s first version.

SeamlessM4T was trained on tens of billions of sentences and 4 million hours of speech scraped from publicly available, openly licensed or licensed sources on the web.