Meta, the parent company of Facebook, has unveiled CM3leon (pronounced as chameleon), a new single foundation generative AI model for images. Unlike some of the previous models which are either specialized in text-to-image or image-to-text tasks, CM3leon is a casual masked mixed-modal (CM3) so it can use text and images to generate responses (both in form of an image or text) based on other image and text content. Some use cases include generating images from prompts, editing images using prompts, generating captions or answer questions about an image.

The popular AI image generation tools, including the likes of Midjourney, Stable Diffusion, and Dall-E mainly rely on diffusion models. CM3leon is however using a tokenization-based autoregressive model. It has allowed Meta to achieve what it says is state-of-the-art performance for text-to-image generation despite the model being trained with five times less compute than previous transformer-based models.

“When comparing performance on the most widely used image generation benchmark (zero-shot MS-COCO), CM3Leon achieves an FID (Fréchet Inception Distance) score of 4.88, establishing a new state of the art in text-to-image generation and outperforming Google’s text-to-image model, Parti. This achievement underscores the potential of retrieval augmentation and highlights the impact of scaling strategies on the performance of autoregressive models,” said the company in its announcement post.

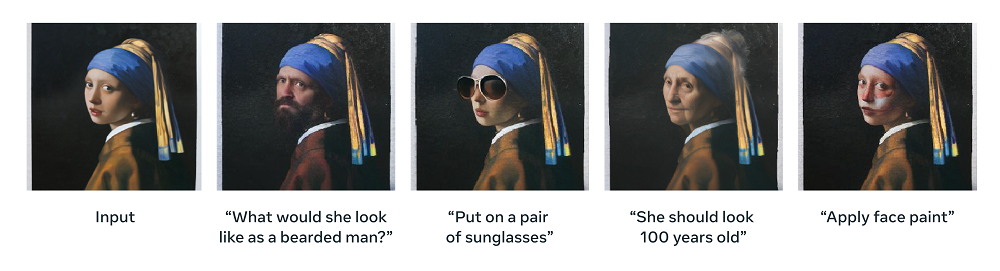

Here is one of the examples they’ve shared in the announcement highlighting how an image can edited using prompts.

Meta has trained the model using a licensed dataset from Shutterstock. It is not the first big breakthrough in generative AI by the company this year. The company last month had also revealed Voicebox, a generative AI model for speech generation.