On Monday, September 25, 2023, OpenAI introduced new image and voice features for GPT-4, making it the company’s most advanced multimodal model yet. The vision-capable version, officially named GPT-4V(ision), can accept images alongside text, while the new voice features let users talk to ChatGPT. Both are rolling out to paying ChatGPT subscribers.

Its performance across a wide range of use cases has already impressed many users. Many have taken to platforms like X (formerly Twitter) and Reddit to share demos of what they’ve been able to create and decode using simple prompts in this latest version of OpenAI’s chatbot.

What is GPT-4V(ision)?

GPT-4 with vision, or GPT-4V, lets users instruct GPT-4 to analyze images they provide. The concept is also known as Visual Question Answering (VQA): answering a natural-language question about an input image. As discussed above, this makes GPT-4 a multimodal model. “Multimodal LLMs offer the possibility of expanding the impact of language-only systems with novel interfaces and capabilities, enabling them to solve new tasks and provide novel experiences for their users,” notes OpenAI in its GPT-4V(ision) system card.

GPT-4V announcement and launch

OpenAI showcased some features of GPT-4V in March during the launch of GPT-4, but initially their availability was limited to a single company, Be My Eyes. Be My Eyes helps people who are blind or have low vision with daily activities through its mobile app. The two companies collaborated on Be My AI, a tool that describes the world to people who are blind or have low vision. They tested the tool with 200 blind and low vision users from March to August 2023, expanding testing to 16,000 users by September.

GPT-4V(ision) underwent a developer alpha phase from July to September, involving over a thousand alpha testers. The new vision feature was officially launched on September 25th.

Rollout and availability

GPT-4V(ision) has been gradually rolling out to Plus and Enterprise subscribers of ChatGPT since its launch announcement. Many paying subscribers currently do not have access, but they are expected to receive it in the coming weeks. It is available on all platforms, including web, iOS, and Android.

OpenAI has not shared a timeline, but it said that the image and voice features will also come to free ChatGPT users in the future.

Logan Kilpatrick, OpenAI’s Developer Relations advocate, has confirmed that GPT-4V(ision) will also be made available to developers through the API at a later date, without sharing specifics.

How to use the new image feature on ChatGPT

You can use the image feature in both ChatGPT’s mobile and web apps. On your phone, tap the camera icon on the left side of the message bar to take a new photo. Alternatively, you can browse the images on your phone and upload them.

You can upload multiple images at once. If you want the chatbot to focus on a specific part of an image, circle it before sending.

On desktop, simply click the image icon on the left side of the message bar to browse and upload images from your computer, enabling the chatbot to analyze them and answer your questions about the images.

GPT-4V(ision) use cases

Here are some of the most popular use cases for GPT-4V(ision), with multiple examples, that we’ve come across on social media and elsewhere online.

1) Turning mockups into live websites and code

The biggest use case to emerge so far, at least judging from early posts on X and other websites, is GPT-4V’s ability to turn drawings, mockups, and designs into live websites and code, making frontend development easier. It is too early to draw conclusions about how useful this actually is, but the early results look promising.

Example 1: In a video posted on X, Mckay Wrigley uses ChatGPT’s newly launched image feature to create a functional dashboard from a design mockup. As he explains in the video, the chatbot doesn’t get everything right but does a fairly good job.

Prompt: You are an expert UI/UX designer and software developer. Break down this SaaS dashboard into components. You are going to pass this plan to a software dev on your team to build.

Example 2: In a second example, Wrigley turns a Figma component into code using GPT-4V. For this one he uses a longer prompt with step-by-step directions and some example code. He explains in the video that the rendered component is not a 1:1 replica, but that it ‘pretty much gave’ him what he ‘asked for’.

Example 3: In a third example, Wrigley turns a whiteboard mockup into a functional web page.

Prompt: You’re an expert software developer. This was my team’s whiteboarding session for our onboarding flow. You need to write code for this in Next.js + Tailwind CSS. Take a deep breath and think step-by-step about how you will do this. Now write the complete code for this working one step at a time.

2) Identifying objects: medicines, cables, and more

With its new capabilities, GPT-4 can identify a wide range of objects and explain relevant details about them. This may be the most practical use case for daily life and shopping.

Example 1: Wired reporter Reece Rogers shared an image of a USB cable with a very simple prompt asking ChatGPT what it was, and the chatbot identified it correctly.

Example 2: The same author used the chatbot to identify a TP-Link smart plug.

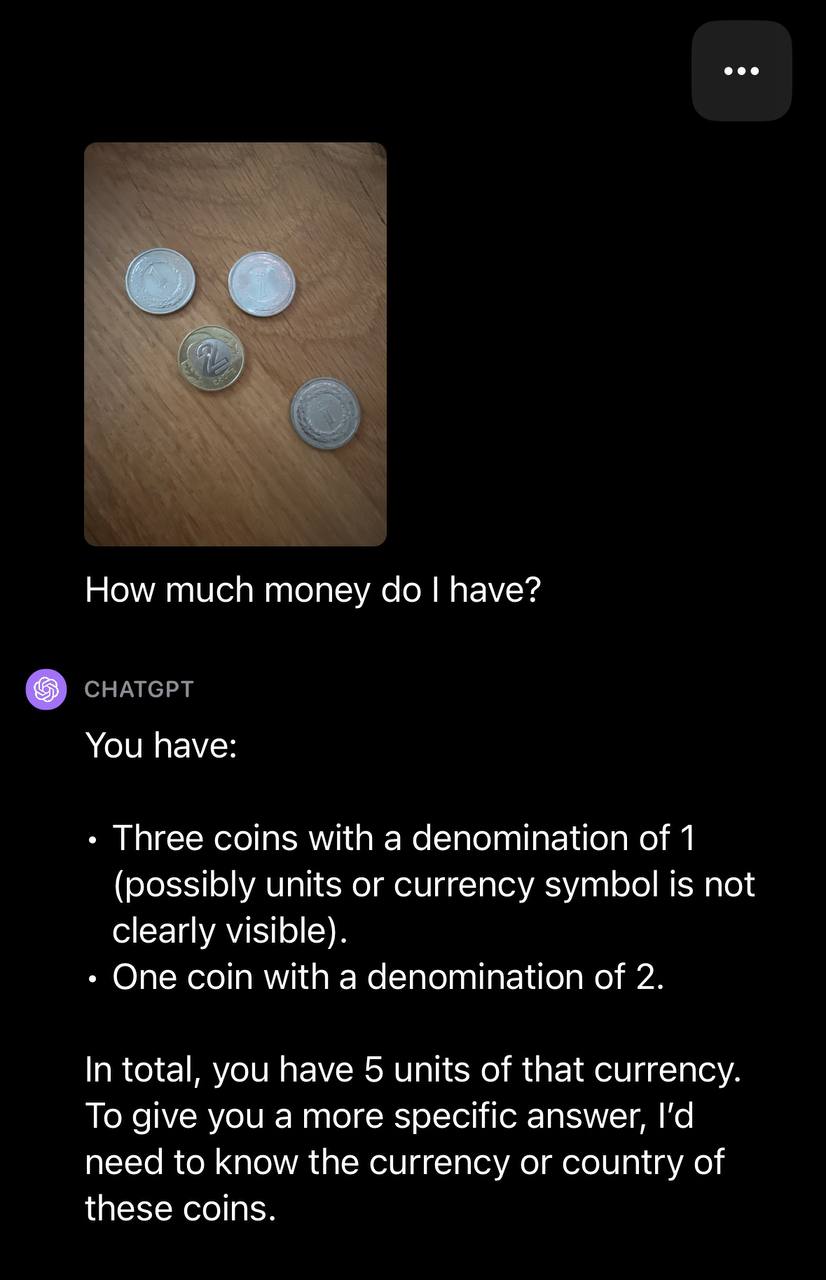

Example 3: Piotr Skalski, an X user, used GPT-4 Vision to identify a coin from an image.

He also asked the chatbot to count the money he had from a picture of four coins lying on the table. In his first attempt, GPT-4 identified the denomination of the coins but couldn’t determine the currency. However, with a follow-up question, the chatbot not only identified the currency but also calculated the total amount of money the author had.

3) Optical Character Recognition (OCR): Transcription and translation

OCR could be a significant use case for GPT-4V. The initial results are mixed, though they may improve over time. It already appears to outperform many options on the market, but in absolute terms it still has a long way to go. Google’s Vision AI remains the gold standard for OCR.

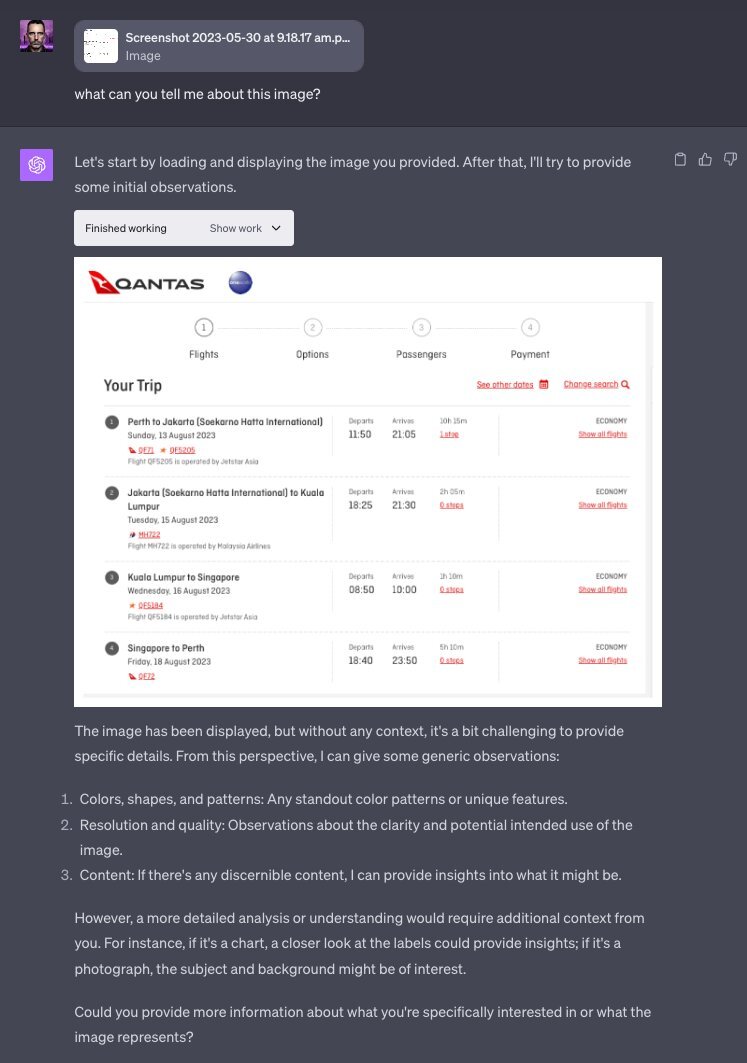

Example 1: Anthony Spiteri uploaded a screenshot of an air ticket and asked ChatGPT to extract the flight details. His initial prompt, “What can you tell me about this image,” didn’t retrieve the details. When he followed up with a more specific prompt asking about the flights in the image, the chatbot provided the flight details.

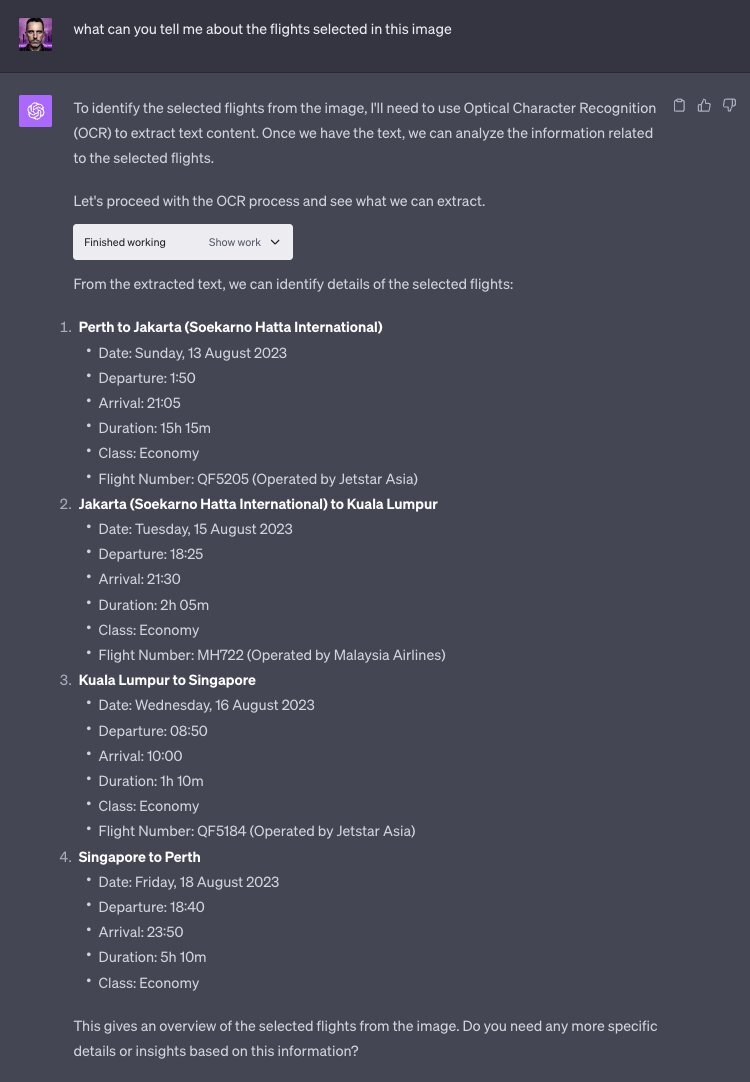

Example 2: Ethan Mollick, an Associate Professor at The Wharton School, tested the new feature on handwritten notes by Hooke and on an ancient Catalan drug manual about medicinal mummies. According to Mollick, it “transcribed the handwriting with reasonable accuracy, and even translated from archaic Catalan to English.”

4) Interior design

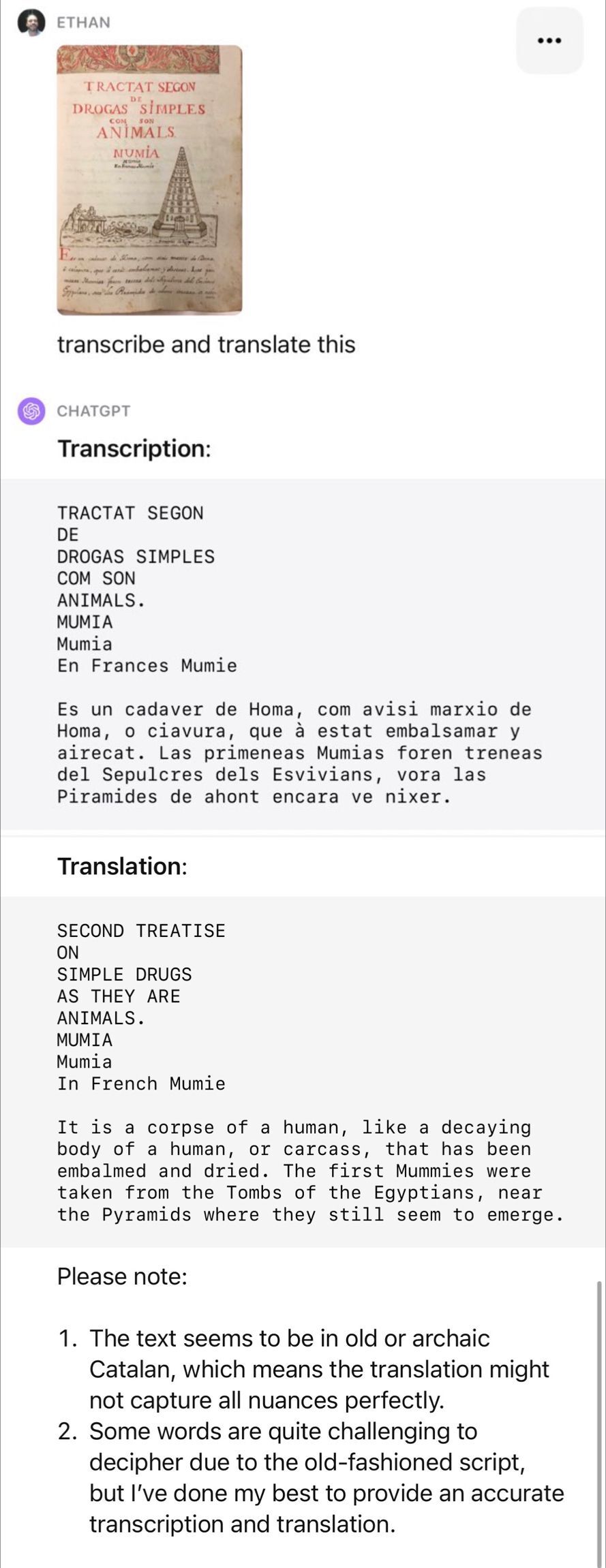

OpenAI’s image generation model, DALL-E, has already proven its usefulness in different aspects of architecture and interior design. Now, ChatGPT’s vision capability offers users advice on improving a room with just an input image.

Example: X user Pietro Schirano asked ChatGPT for help improving his room. According to Schirano, GPT-4’s suggestions drew on what the chatbot knew about him from his custom instructions.

5) Identifying movies

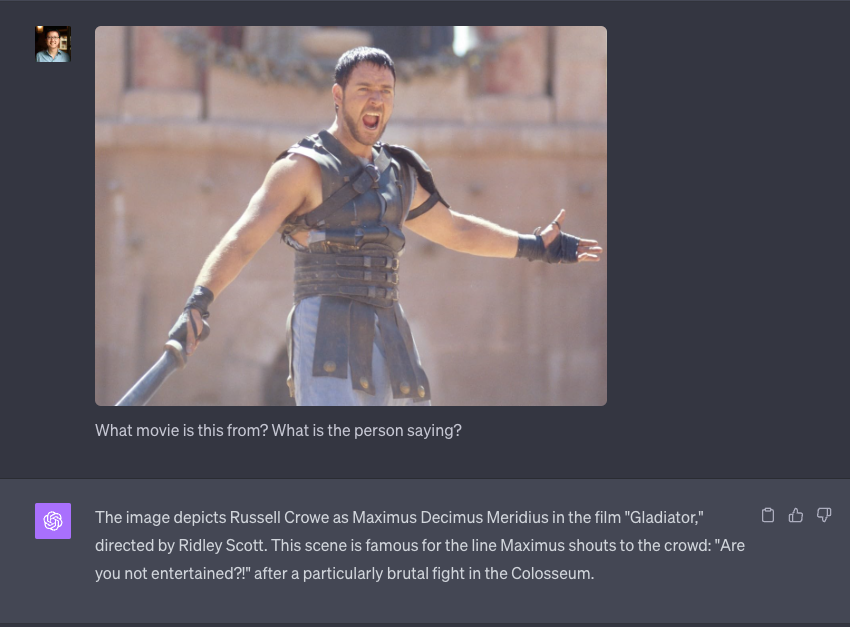

Shazam made it easy to identify and discover music just by listening to a clip of it. With its latest capabilities, ChatGPT can now identify a movie by analyzing an image from a scene in the film. In some cases, it can even share the exact dialogue a character is saying in a particular scene from just an image of it.

Example 1: With a straightforward prompt “What movie is this? What is this person saying?” ChatGPT identifies the movie, names the character and the actor playing it, mentions the movie’s director, and proceeds to provide scene details.

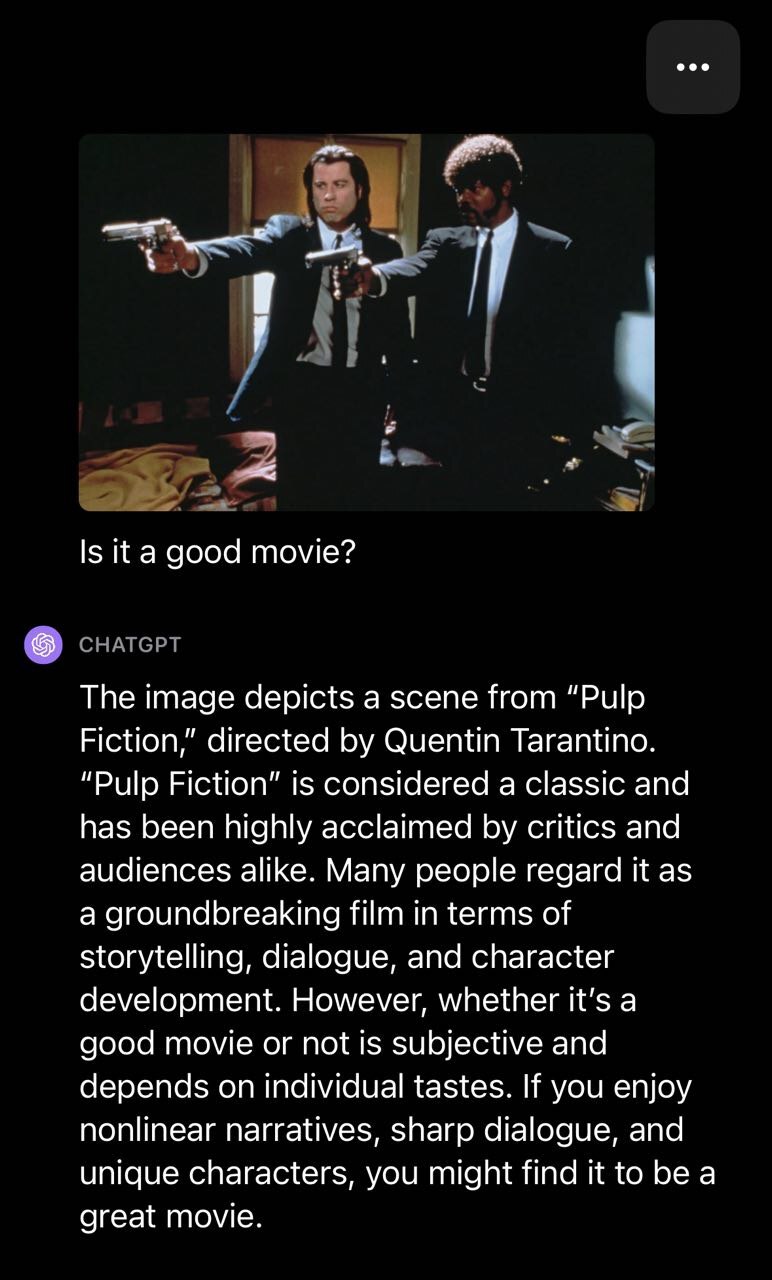

Example 2: In this second example, Piotr Skalski shares an image from a movie scene and asks ChatGPT whether it’s a good movie. The chatbot provides details about the movie and explains that whether the user would enjoy it depends on their interests and preferences.

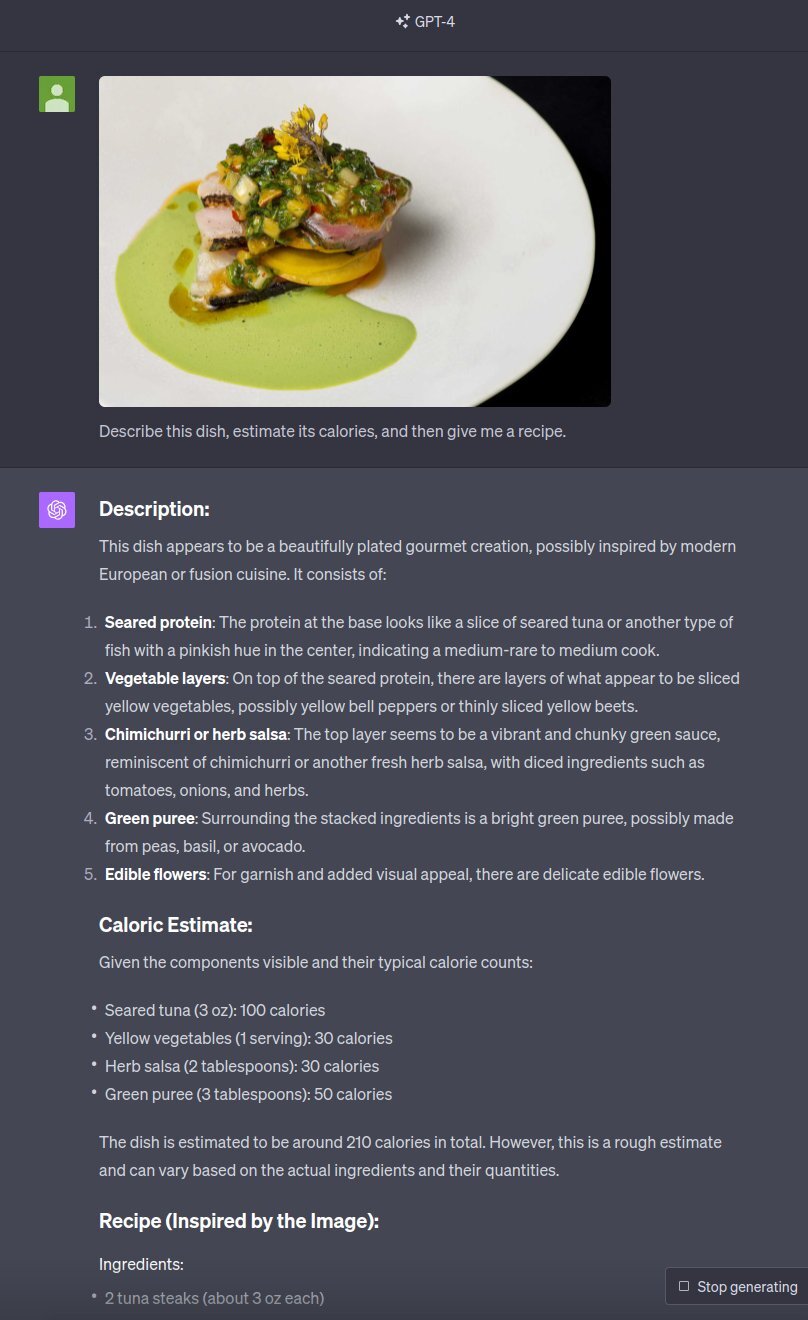

6) Turning food pictures into recipes

It’s still early, but there are promising signs that GPT-4V could become a valuable tool for meal planning. The chatbot can analyze pictures of food to describe the dish, suggest a recipe, and estimate its calories, though with mixed results.

Example: DT, an X user, uploaded pictures of multiple dishes and asked the chatbot for details about each. Here are the results.

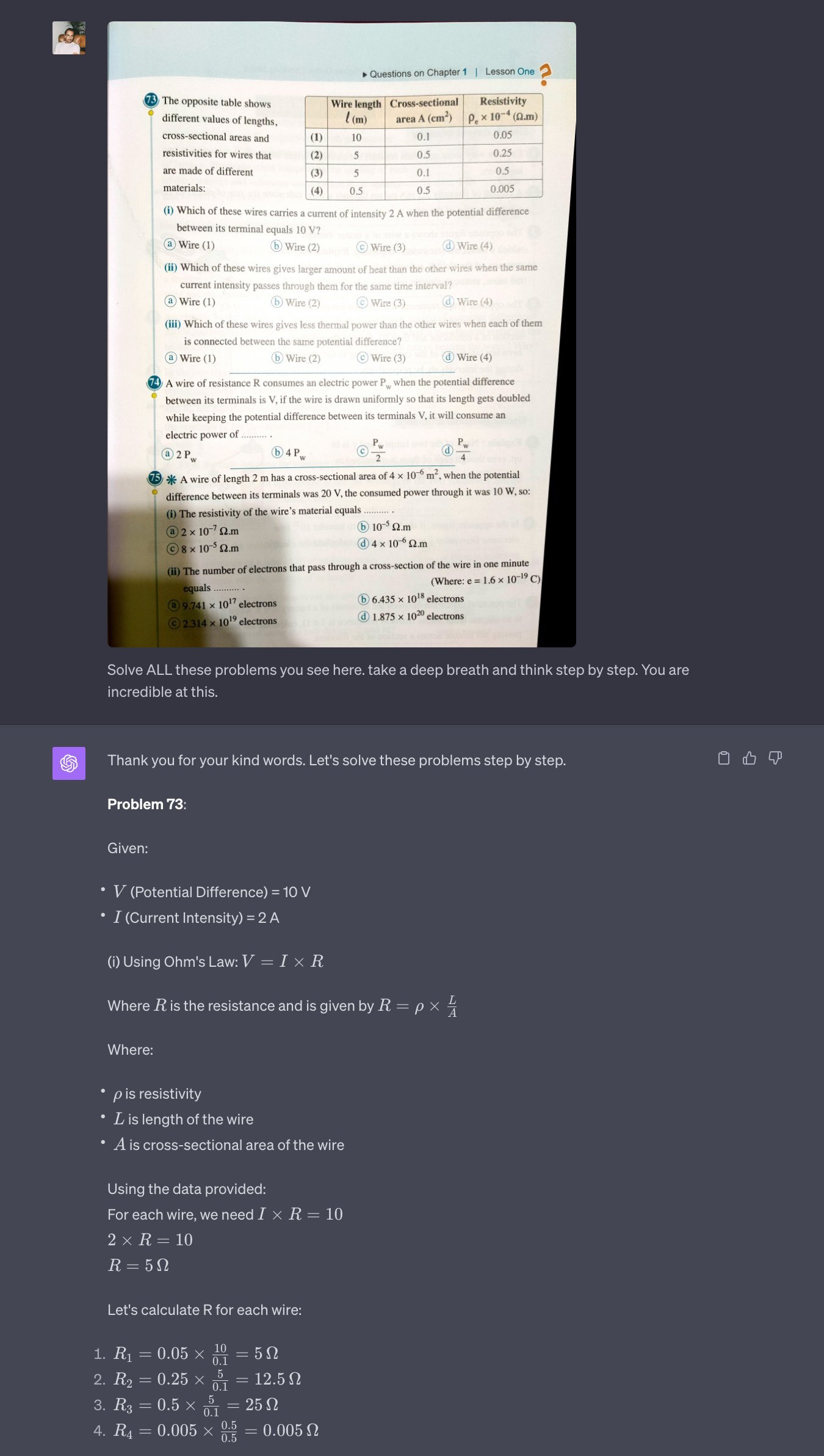

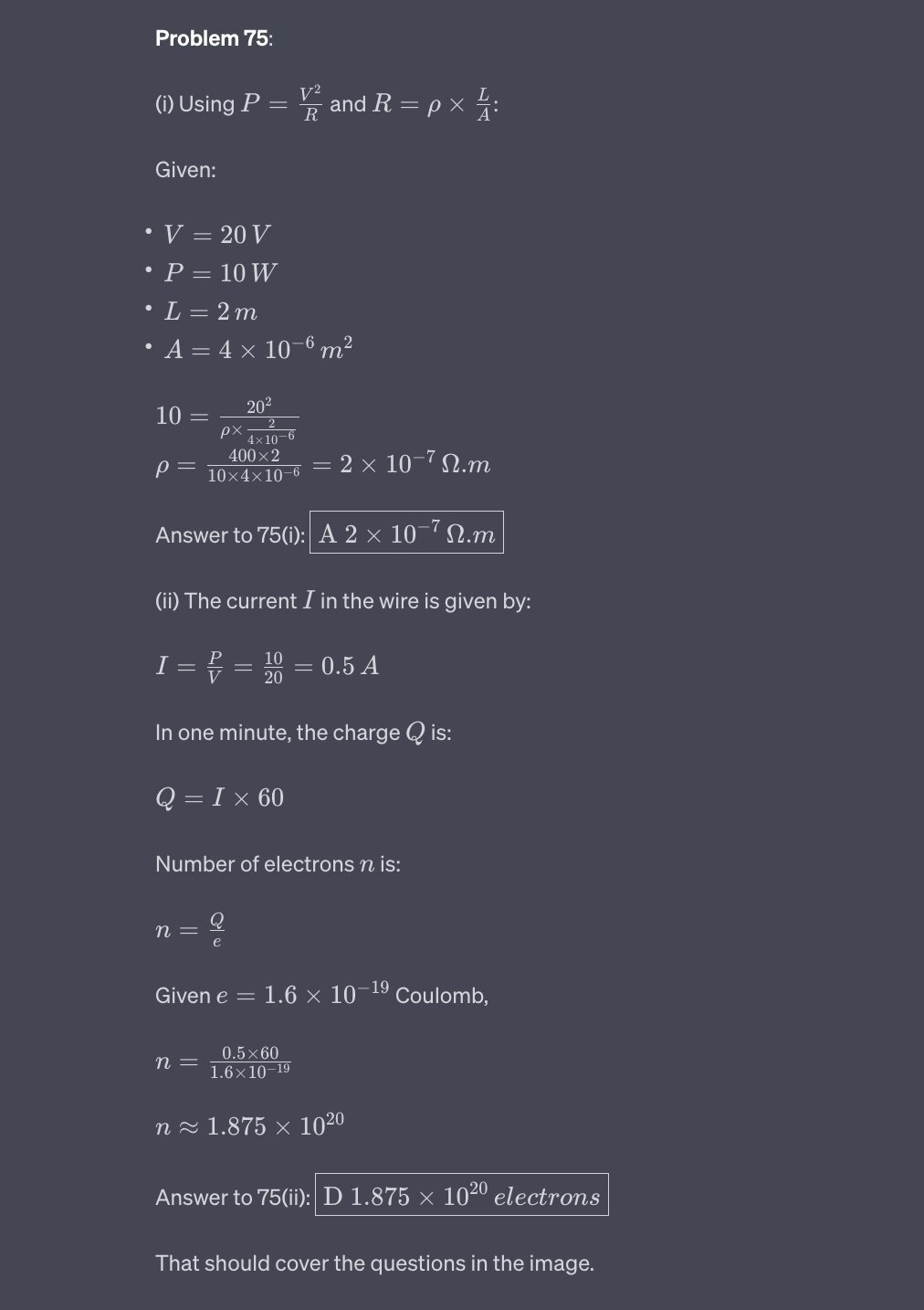

7) Help with studies

ChatGPT is already useful for students, helping with a wide range of academic tasks, from research and drafting to answering questions and explaining concepts. GPT-4V extends that help to visual material such as diagrams and pages of printed questions.

Example 1: At another X user’s request, Pietro Schirano tested GPT-4V on a single page containing multiple questions and had it answer all of them. It did a pretty good job.

Example 2: Mckay Wrigley on X asked GPT-4V to help him understand a diagram of a human cell from the perspective of a 9th-grade biology student. The chatbot accurately listed all eighteen labels on the diagram and explained each one.

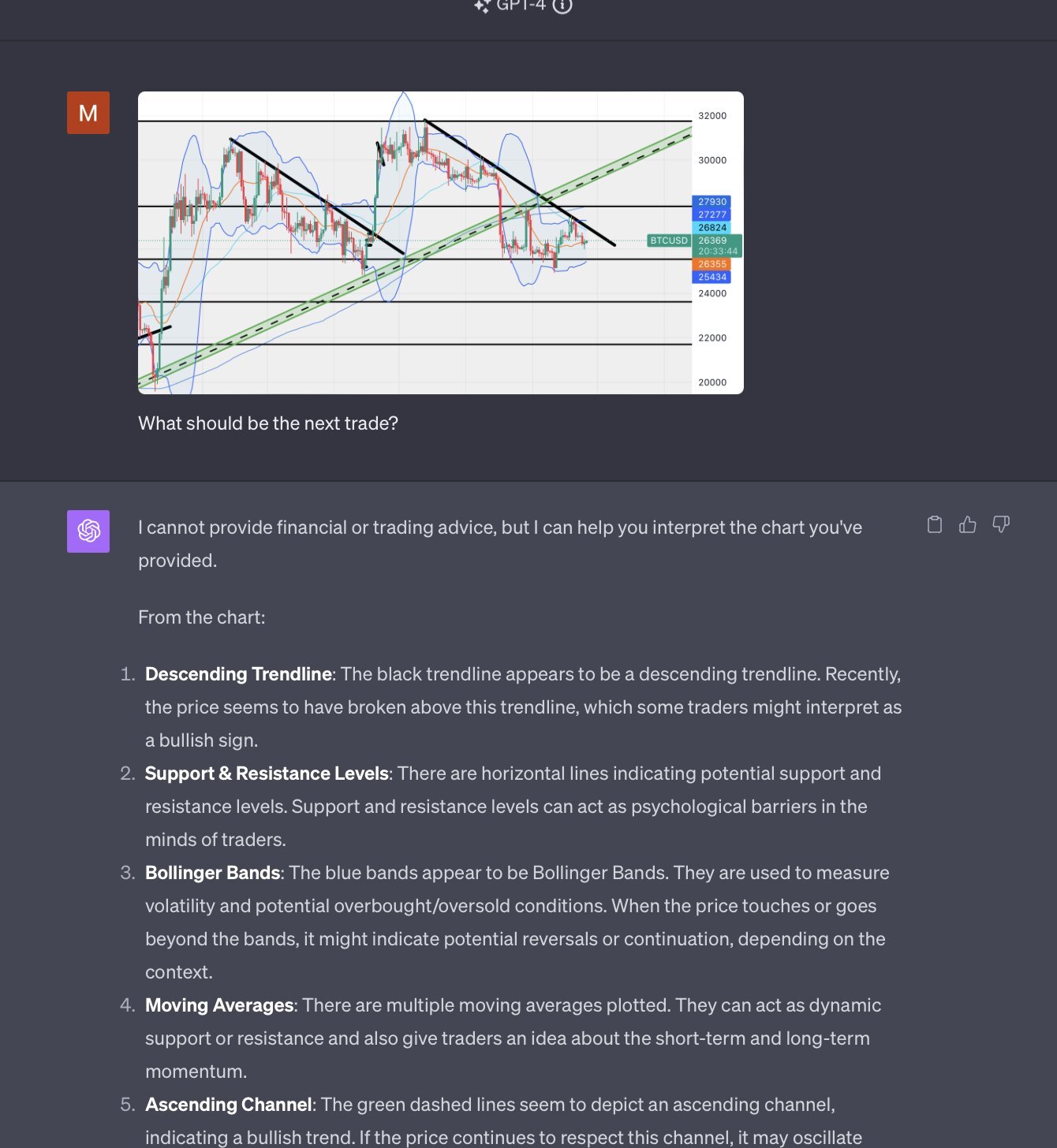

8) Decoding infographics, charts, and more

GPT-4V excels not only in deciphering diagrams but also in simplifying complex infographics, charts, and other visuals to enhance understanding for users.

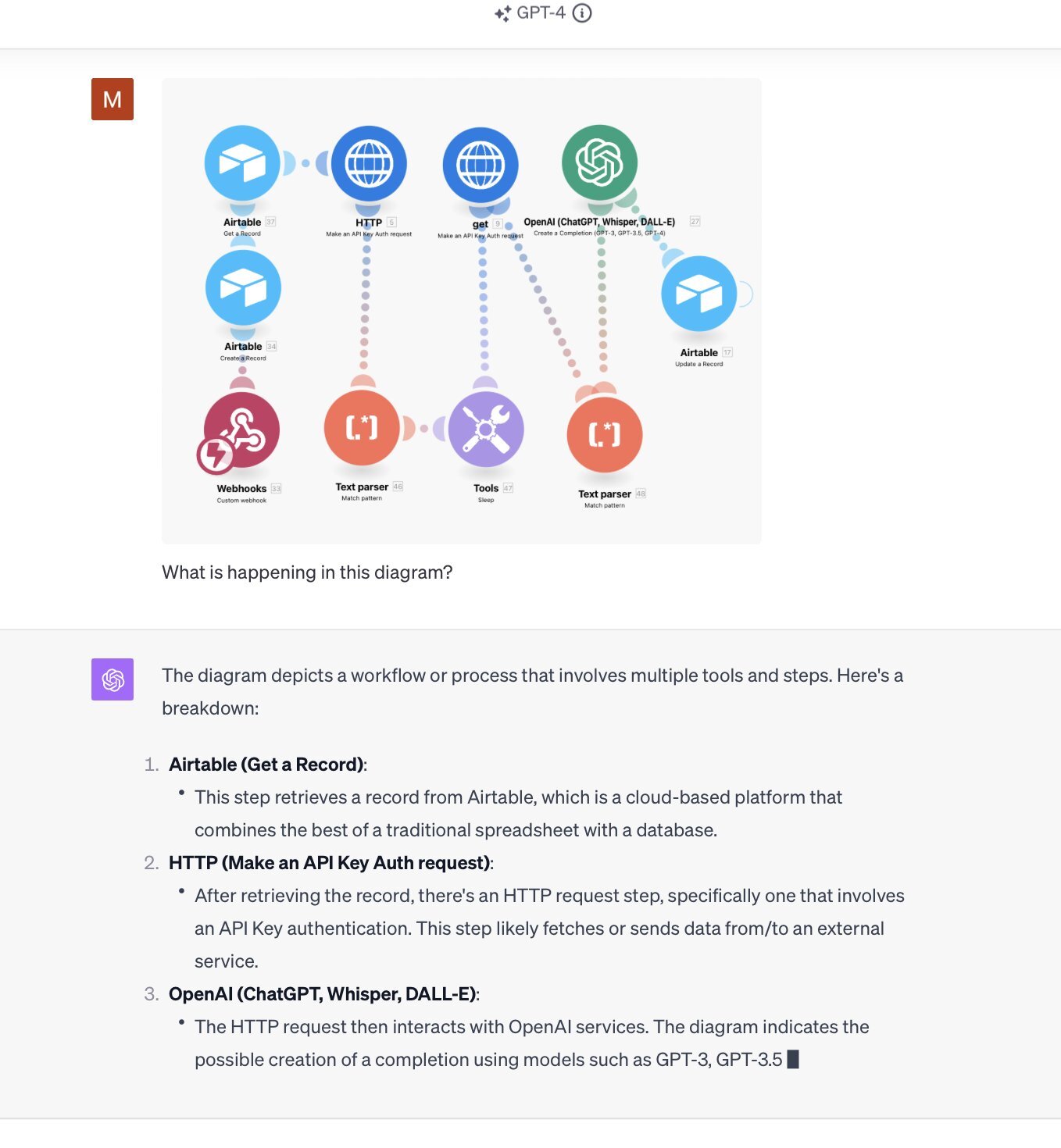

Example 1: Muratcan Koylan had the chatbot decode a workflow diagram showing how different tech tools interact with each other. It broke down each tool and the task it performs at each step.

Example 2: In a second example, Koylan had ChatGPT decode a financial chart. He wanted it to recommend the next trade, but it refused. It did, however, successfully break down the chart for him.

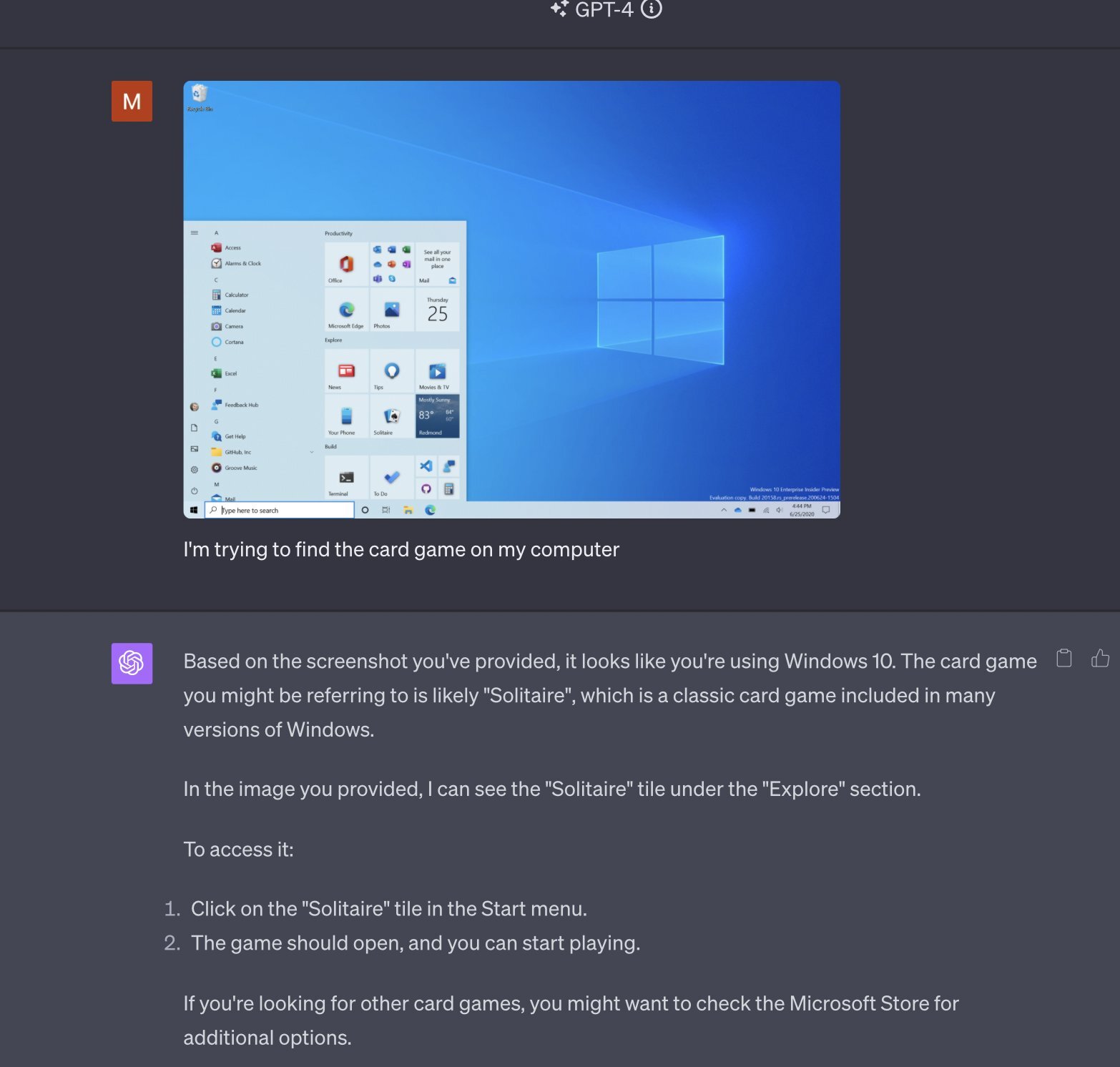

9) Troubleshooting and teaching software

This is perhaps one of the most interesting use cases of GPT-4V. It needs further testing, but early examples suggest it can help troubleshoot a variety of problems.

Example 1: OpenAI’s official launch announcement of the image feature included an example in which a user got help lowering their bicycle seat over several prompts.

Example 2: An X user, Muratcan Koylan, asked ChatGPT to help him locate a card game on his computer by sharing a picture of his computer’s desktop and start menu. The chatbot successfully identified the operating system and then guided him through the process step by step to find the game.

There are many other great use cases of GPT-4 with vision across various domains, and we plan to cover them in a part two. Follow us on Twitter and LinkedIn to find out when it’s published.