Generative AI has been all the rage lately, with nearly every enterprise looking to integrate ChatGPT-like chatbots into their businesses or build their own. In a recent survey conducted by the AI Infrastructure Alliance, nearly 700 out of 1,000 enterprises said they had made adopting large language models (LLMs) their top priority.

However, many organizations face challenges when adopting LLMs, largely due to limited customizability and flexibility, difficulty preserving company knowledge, or high costs.

This is where Giga ML comes in. The young startup aims to address the affordability and privacy limitations of AI chatbots by deploying LLMs offline. Founded by Varun Vummadi and Esha Manideep, Giga ML aims to let companies deploy LLMs on-premises.

In an interview with TechCrunch, Vummadi said: “Data privacy and customizing LLMs are some of the biggest challenges faced by enterprises when adopting LLMs to solve problems. Giga ML addresses both of these challenges.”

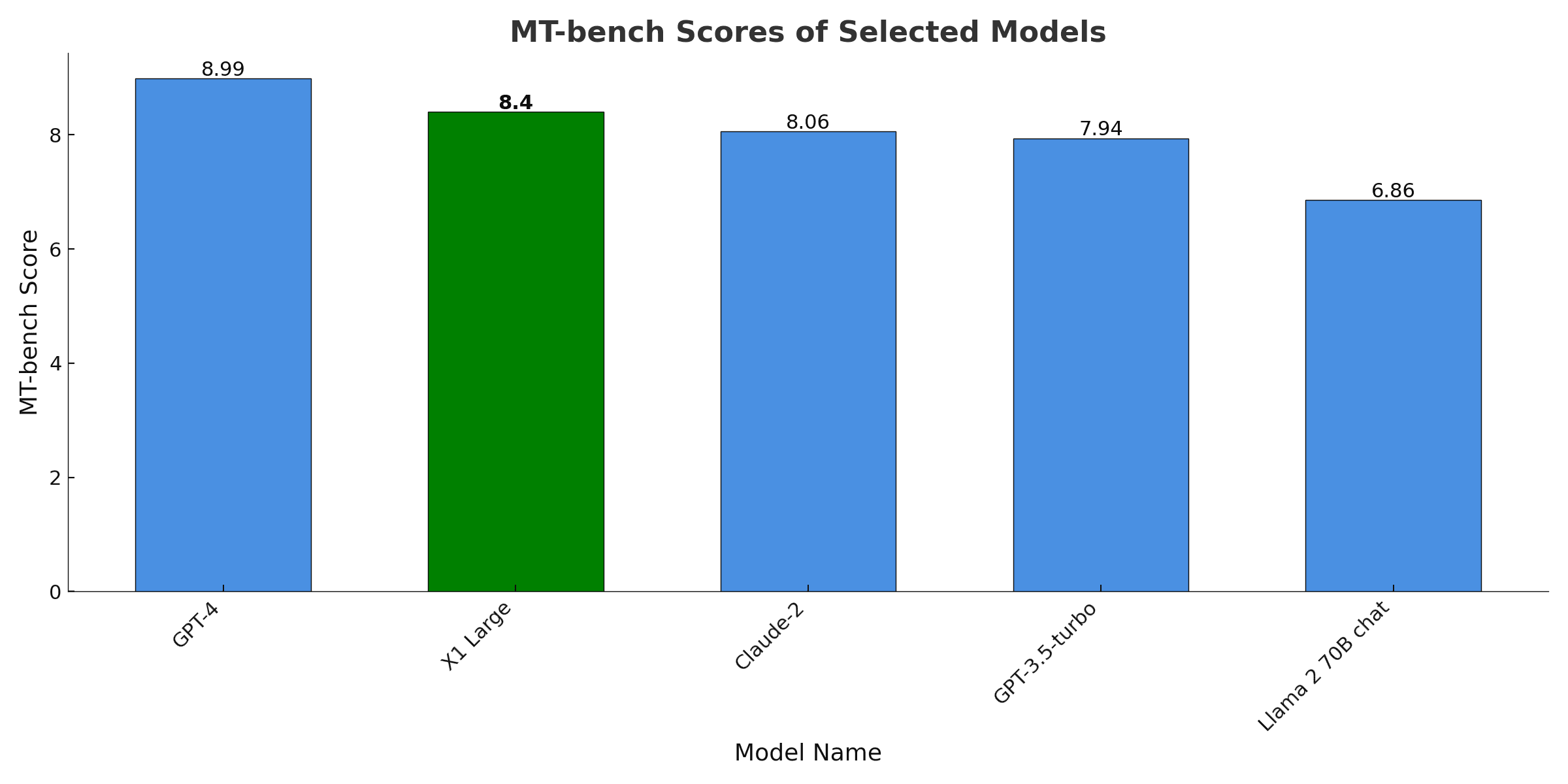

Giga ML builds its own LLMs on top of Meta’s Llama 2 model and claims they outperform certain other LLMs on benchmarks, including the MT-Bench test set for dialogue. The startup’s models, called the “X1 Series,” can, according to Giga ML, be easily tailored for tasks such as generating code or answering customer support questions like “When is my order going to arrive?”

However, it is hard to assess the quality of X1 and how it stacks up against competing models. TechCrunch was unable to test Giga ML’s models through its online demo because of technical issues: the app timed out on every prompt.

It is worth noting that Giga ML’s focus is not on building the best-performing LLM but on letting businesses fine-tune AI models to their needs without relying on third-party platforms and resources.

Vummadi said: “Giga ML’s mission is to help enterprises safely and efficiently deploy LLMs on their own on-premises infrastructure or virtual private cloud. Giga ML simplifies the process of training, fine-tuning, and running LLMs by taking care of it through an easy-to-use API, eliminating any associated hassle.”

He also highlighted the privacy benefits of running an LLM offline, a feature that is likely to appeal to many businesses.