xAI, an AI startup led by Elon Musk, has unveiled a preview of its inaugural multimodal AI model, Grok-1.5 Vision. The company claims that this model surpasses its competitors in understanding the physical world.

Grok-1.5 Vision extends beyond traditional text processing abilities, supporting a wide range of visual inputs such as documents, diagrams, graphics, screenshots, and photographs. The model is set to be accessible soon to early testers and existing Grok users.

xAI positions Grok-1.5 Vision, which it also calls Grok-1.5V, as competitive with current leading multimodal models. The company emphasizes that the model handles a broad range of tasks, from multidisciplinary reasoning to the analysis of documents, scientific diagrams, graphics, screenshots, and photographs.

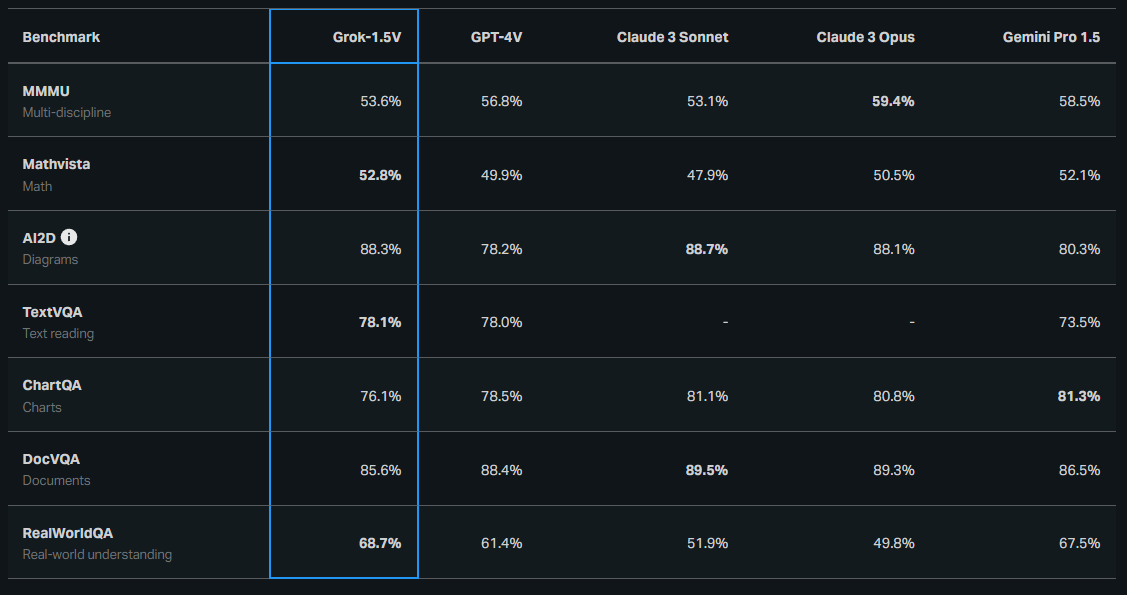

To substantiate these claims, xAI released a performance comparison of Grok-1.5V against other prominent models such as OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini Pro. According to the data xAI presented, Grok-1.5V matched or surpassed its competitors in most of the tested areas.

xAI uses its newly introduced RealWorldQA benchmark to highlight how well Grok understands the physical world. The benchmark assesses a model’s spatial understanding of real environments. According to xAI, Grok scored above several of its rivals using only zero-shot prompts, meaning the prompt contains no worked example solutions.
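To make the zero-shot idea concrete, here is a minimal Python sketch of how a zero-shot prompt differs from a few-shot one. It is purely illustrative: the `build_prompt` helper, the `Q:`/`A:` layout, and the sample questions are assumptions made for this example, not xAI’s evaluation setup, and the image that would accompany each question is left out.

```python
from typing import Sequence, Tuple


def build_prompt(question: str, examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Assemble a text prompt (hypothetical helper, not part of any xAI tooling).

    With no examples the result is a zero-shot prompt: only the question.
    With examples, each solved (question, answer) pair is placed before the
    real question, which makes it a few-shot prompt.
    """
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Zero-shot: the model sees the question and nothing else.
zero_shot = build_prompt("Which object in the photo is closer to the camera?")

# Few-shot: one solved example precedes the real question.
few_shot = build_prompt(
    "Which object in the photo is closer to the camera?",
    examples=[("Is the door in the photo open or closed?", "Open")],
)

print(zero_shot)
print("---")
print(few_shot)
```

In the zero-shot case the model has to rely entirely on what it already knows, which is why zero-shot scores are often treated as a stricter test.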

xAI has shared a sample in which Grok is given an image of a flowchart and turns it into Python code. The flowchart describes a guessing game between a computer and a player, and Grok correctly identified this. The example is meant to show Grok’s ability to understand diagrams and turn them into code.

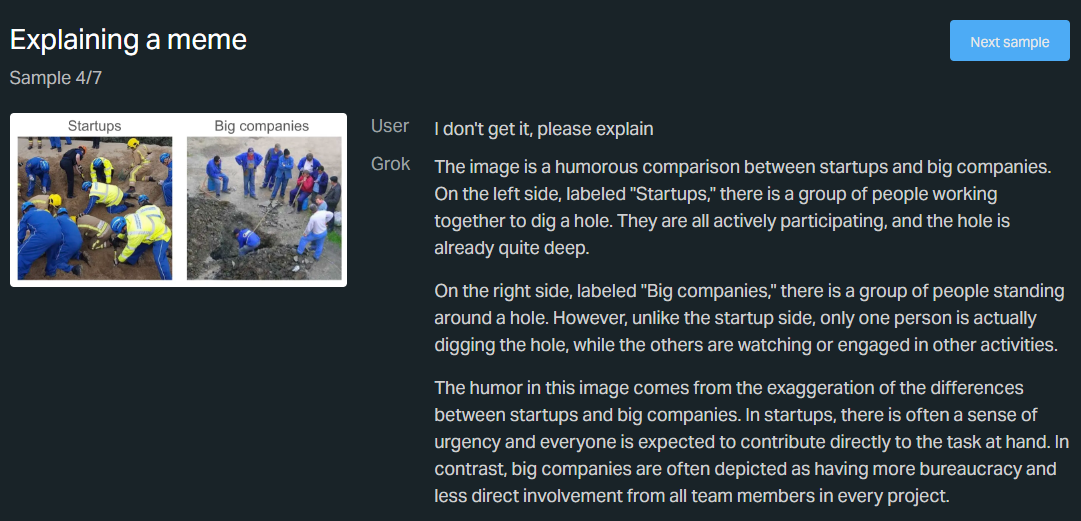

Another example shows Grok explaining a meme comparing employees working at a startup vs employees working in a big company.

Since xAI believes that understanding the physical world is critical for an AI model, the startup developed the RealWorldQA benchmark, designed to test the spatial understanding capabilities of multimodal AI models. While the scenarios presented in this benchmark may seem simple to humans, they pose significant challenges for AI systems, highlighting the complex nature of real-world interactions.

Since Grok-1.5 Vision is still in preview, xAI expects to make significant improvements over the coming months across modalities such as images, audio, and video. The company plans to launch Grok 2 in May, and Musk says it will be able to beat GPT-4.

Via: The Decoder