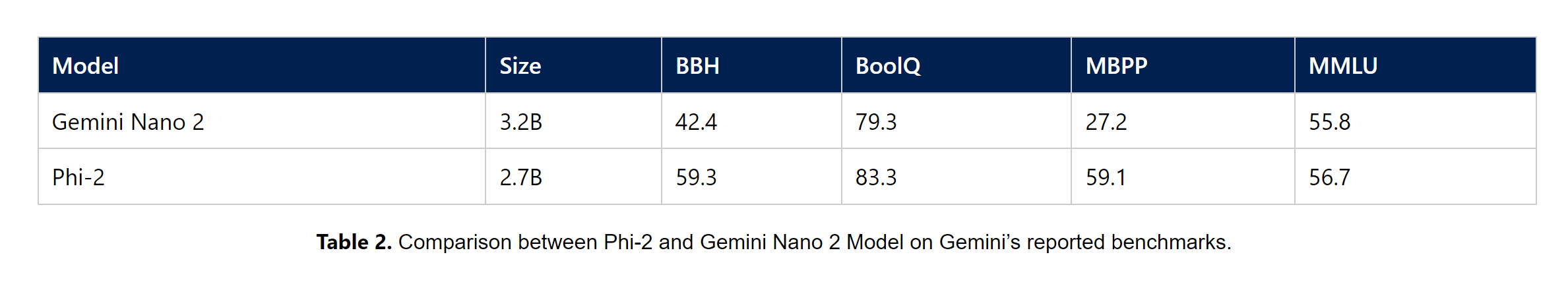

Microsoft recently announced its newest small language model, Phi-2, a 2.7-billion-parameter AI model meant to compete with Meta’s Llama 2, Mistral 7B, and Google’s Gemini Nano. The company has also shared benchmark results showing Phi-2 outperforming Google’s newly announced Gemini Nano.

Against Gemini Nano, the smallest model in Google’s Gemini family, Phi-2 scored higher on every benchmark Microsoft showcased.

Microsoft says it also tested Phi-2 extensively on commonly used prompts. The company said: “We observed a behavior in accordance with the expectation we had given the benchmark results.”

The software giant also claims that on complex benchmarks, Phi-2 matches or outperforms models up to 25 times its size, which it attributes to advances in model scaling and in the curation of training data.

Like Gemini Nano, Phi-2 is meant to run on end devices: the text-to-text model is small enough to run on a laptop or a mobile device. For now, Phi-2 is available in the Azure AI model catalog to “foster research and development on language models,” while Gemini Nano already powers Google’s Pixel 8 Pro smartphone.

Microsoft also took the opportunity to jab at Google’s now heavily criticized Gemini demonstration video, showing that Phi-2, a small language model, can handle the same queries as Gemini Ultra despite being far smaller. Gemini Ultra, Google’s most advanced AI model yet, was given an image as part of its prompt, whereas Phi-2 handled the same query with text input alone.

Despite the impressive results, Phi-2 is licensed for “research purposes only” under a custom Microsoft Research License, which rules out commercial use. The license specifies that Phi-2 may be used only for “non-commercial, non-revenue generating research purposes,” so businesses cannot build products on it.

Regardless, Phi-2 shows significant improvements in logical reasoning and safety over its predecessor, Phi-1.5. The company also noted that, with appropriate fine-tuning and customization, the small model can be an effective tool for both cloud and edge applications.