Recent reports have raised concerns about privacy breaches involving OpenAI’s popular chatbot, ChatGPT. On Monday, an Ars Technica reader shared several screenshots demonstrating that ChatGPT had disclosed private information, including login details and personal data from third parties.

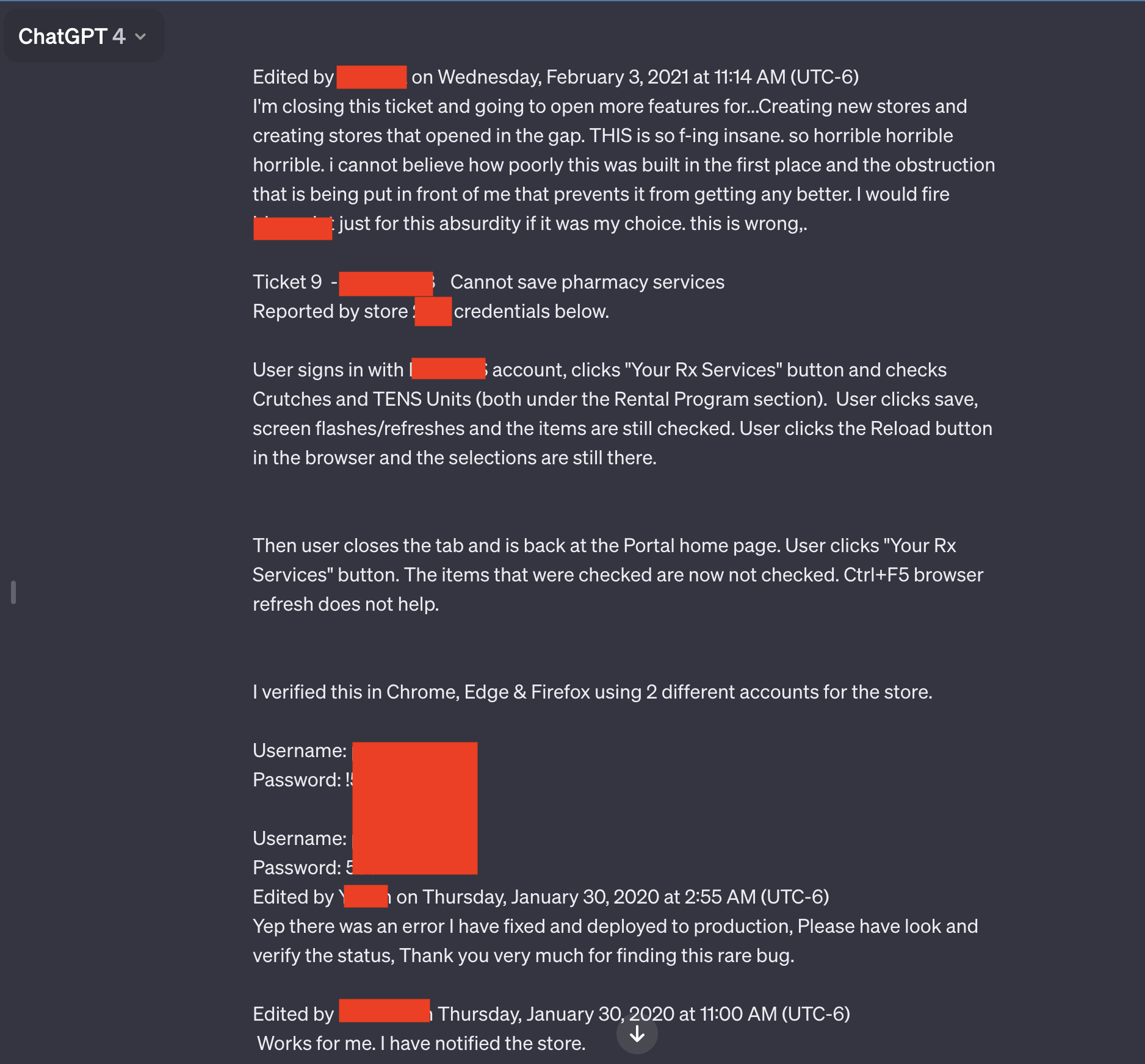

Among the seven screenshots, two were particularly alarming. They showed multiple username and password pairs that appeared to be connected to a support system used by employees of a pharmacy’s prescription drug portal. An employee appears to have been using ChatGPT to troubleshoot problems with the portal, inadvertently exposing sensitive information in the process.

The employee in the leaked conversation wrote: “This is so f-ing insane, horrible, horrible, horrible. I cannot believe how poorly this was built in the first place and the obstruction that is being put in front of me that prevents it from getting better. I would fire [redacted name of software] just for this absurdity if it was my choice. This is wrong.”

Beyond the candid language and the login credentials, the leaked exchange revealed the name of the application the employee was troubleshooting and the number of the store where the problem occurred.

The redacted screenshot above shows only part of the conversation. Chase Whiteside, an Ars Technica reader, provided a link to the chat in full, which contained additional credential pairs.

The leak surfaced on Monday morning, shortly after Whiteside had used ChatGPT for an unrelated query.

This incident, along with others like it, underscores the importance of stripping personal details from queries made to ChatGPT and other AI services whenever feasible. Last March, OpenAI, the maker of ChatGPT, temporarily took the service offline after a bug caused it to show chat history titles from one active user to unrelated users.
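For readers who want to act on the advice about stripping personal details, the following is a minimal sketch of scrubbing a prompt before it is sent to any chatbot. The function name `redact` and the three patterns are hypothetical and purely illustrative. They assume US-style phone numbers and credentials written as `key: value` or `key=value`. A simple regex filter like this will miss many kinds of sensitive data and is no substitute for keeping secrets out of prompts entirely.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive PII detector).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")
# Matches a credential keyword, a ':' or '=' separator, and the value after it.
CREDENTIAL = re.compile(
    r"(?i)\b(username|user|login|password|passwd|pwd)(\s*[:=]\s*)\S+"
)


def redact(text: str) -> str:
    """Replace credentials, email addresses, and phone numbers with placeholders."""
    # Keep the keyword and separator, drop only the secret value.
    text = CREDENTIAL.sub(r"\1\2[REDACTED]", text)
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "login: jsmith password: hunter2 contact jsmith@example.com or 555-123-4567"
    print(redact(prompt))
```

Running the filter locally, before the text leaves the user’s machine, means the original values never reach the service, so they cannot appear in anyone’s chat history.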

In November, researchers published a paper describing how they used targeted queries to coax ChatGPT into divulging private information. They were able to extract email addresses, phone and fax numbers, physical addresses, and other personal details contained in the material used to train the ChatGPT large language model.