While advancements in AI image creation have been notable, image processing has historically struggled more often. However, Apple is poised to change this narrative by showcasing a new method that comprehends and executes intricate text commands for image editing, making it smarter overall.

Apple’s latest AI image editing model comes in the form MGIE, which stands for Multimodal Large Language Models Guided Image Editing, created in partnership with researchers at the University of California. This new open-source model can edit images based on simple language descriptions.

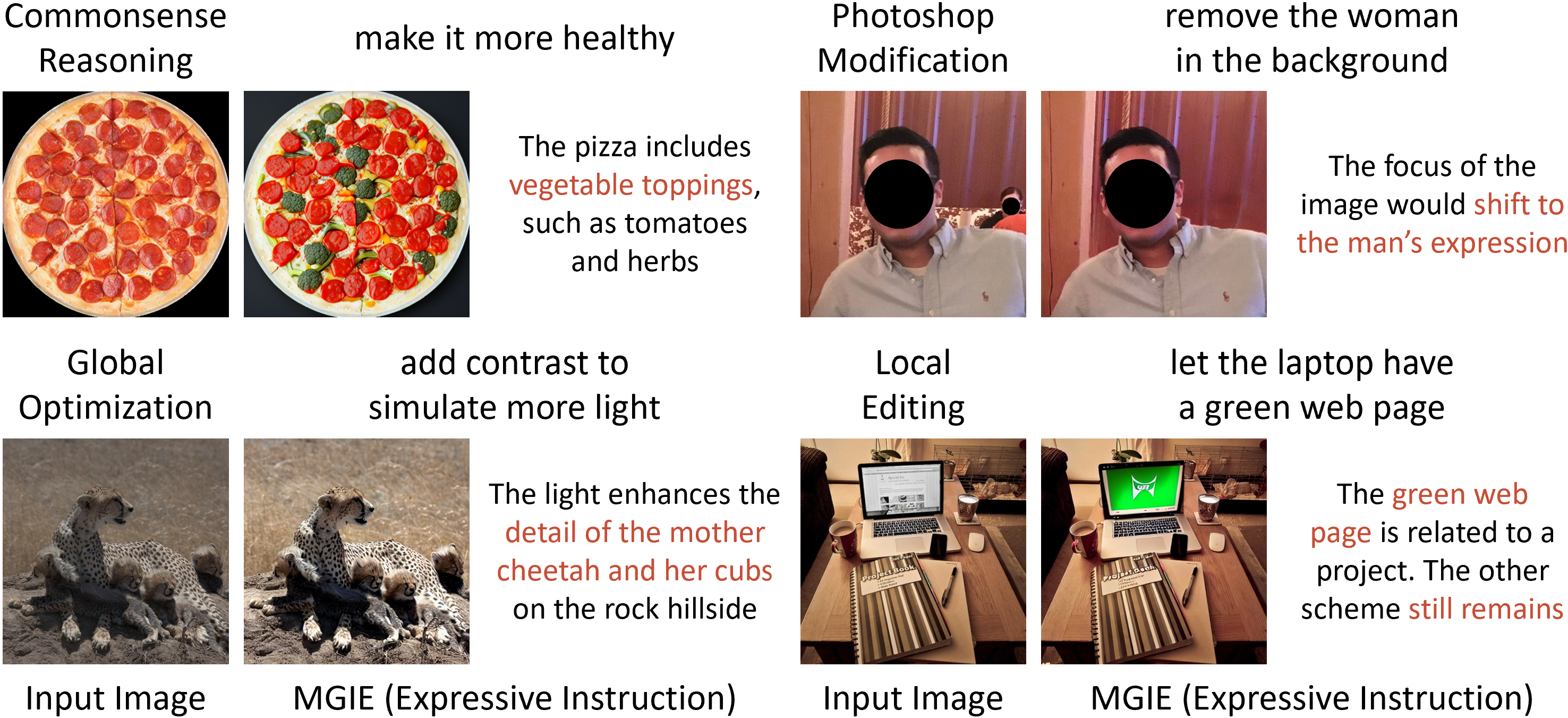

Here are a few example images edited through Apple’s MGIE.

Utilizing Multimodal Large Language Models (MLLMs), MGIE excels in deciphering user commands and executing pixel-precise image alterations. Leveraging the prowess of MLLMs, which can effectively analyze both textual and visual inputs, MGIE represents a significant stride in image processing technology. This approach builds upon the success of applications like ChatGPT, which employs GPT-4V to comprehend images, and DALL-E 3 to generate new ones.

Thanks to these capabilities, Apple’s MGIE allows users to perform tasks as simple as color adjustment all the way to complicated projects such as object manipulation. MGIE boasts of enabling these features for the first time.

MGIE also boasts the capability to execute both global and local image manipulations as well as expressive instruction-based editing, facilitating common Photoshop-esque alterations like cropping, scaling, rotating, mirroring, and applying filters.

MGIE boasts the capability to execute both global and local image manipulations with finesse. This versatile model enables expressive instruction-based editing, facilitating common Photoshop-esque alterations like cropping, scaling, rotating, mirroring, and applying filters.

Moreover, MGIE demonstrates proficiency in comprehending intricate commands such as altering backgrounds, adding or removing objects, and seamlessly merging multiple images.

This model aims to resolve the problem of user prompts often being too short and devoid of sufficient descriptions. To overcome this problem, the model has been trained to add a preface to the user’s instruction such as “What will this image be like if” around the prompt. This allows MGIE to create a very detailed prompt by itself.

These prompts tend to become too lengthy at times, which is why there is a separate pre-trained model that can shorten them. For instance, terms such as “desert” are correlated with concepts like “sand dunes” or “cacti”. Subsequently, the model proceeds to generate the image. OpenAI utilizes a comparable methodology with DALL-E 3 in ChatGPT.

MGIE can be accessed through GitHub as well as Hugging Face through a demo.