Researchers at the Stanford Internet Observatory (SIO) have found thousands of suspected child sexual abuse material (CSAM) images in an open dataset used to train AI models.

The dataset, LAION-5B, was used to train Stability AI’s popular open-source image generator Stable Diffusion, as well as Google’s Imagen and Parti. It links to billions of images, some of them scraped from social media and explicit websites. The researchers confirmed that at least 1,008 of these images were child sexual abuse material, and according to the report the dataset contains thousands of further suspected cases.

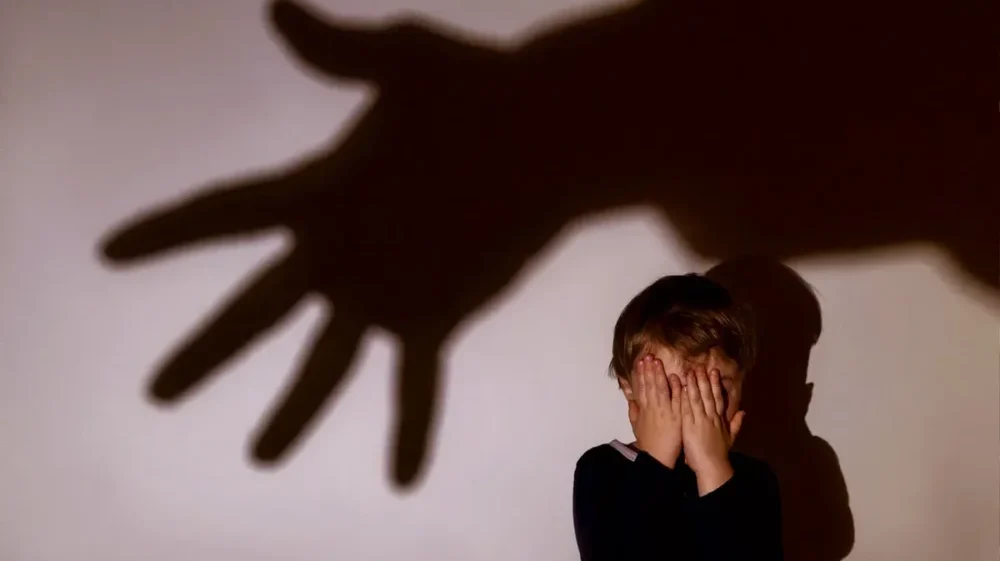

The presence of CSAM in training data raises serious concerns that AI products such as Stable Diffusion could generate new and disturbingly realistic child abuse imagery.

The report warns that image generators built on Stable Diffusion 1.5 carry a heightened risk of producing such images and recommends halting their distribution. Its successor, Stable Diffusion 2.0, is considered safer because the LAION data it was trained on was filtered more strictly to remove harmful and prohibited content.

The problem is already growing. In late October, the Internet Watch Foundation (IWF) reported a sharp rise in AI-generated CSAM: its analysts found more than 20,000 AI-generated images posted to a single dark web CSAM forum within one month. And as AI-generated CSAM becomes more realistic, it becomes harder for investigators to tell it apart from imagery of real children and to identify actual cases of abuse.

LAION, a German non-profit, has since temporarily taken LAION-5B and its other datasets offline. LAION says it has a “zero tolerance policy” for illegal content and that its datasets will be cleaned before they are republished.

According to the Stanford report, the URLs of the abuse imagery have been reported to child protection agencies in the US and Canada. SIO recommends that future datasets be checked against known illegal content using image-matching tools such as Microsoft’s PhotoDNA, and that organizations work with child protection agencies to prevent similar incidents.

LAION-5B has drawn criticism before, for including medical images of patients. Anyone curious about what the dataset contains can search it on the “Have I Been Trained?” website.