Groq, an AI startup not to be confused with Elon Musk’s Grok, has announced a new AI processing chip that can run large language models (LLMs) at unprecedented speeds.

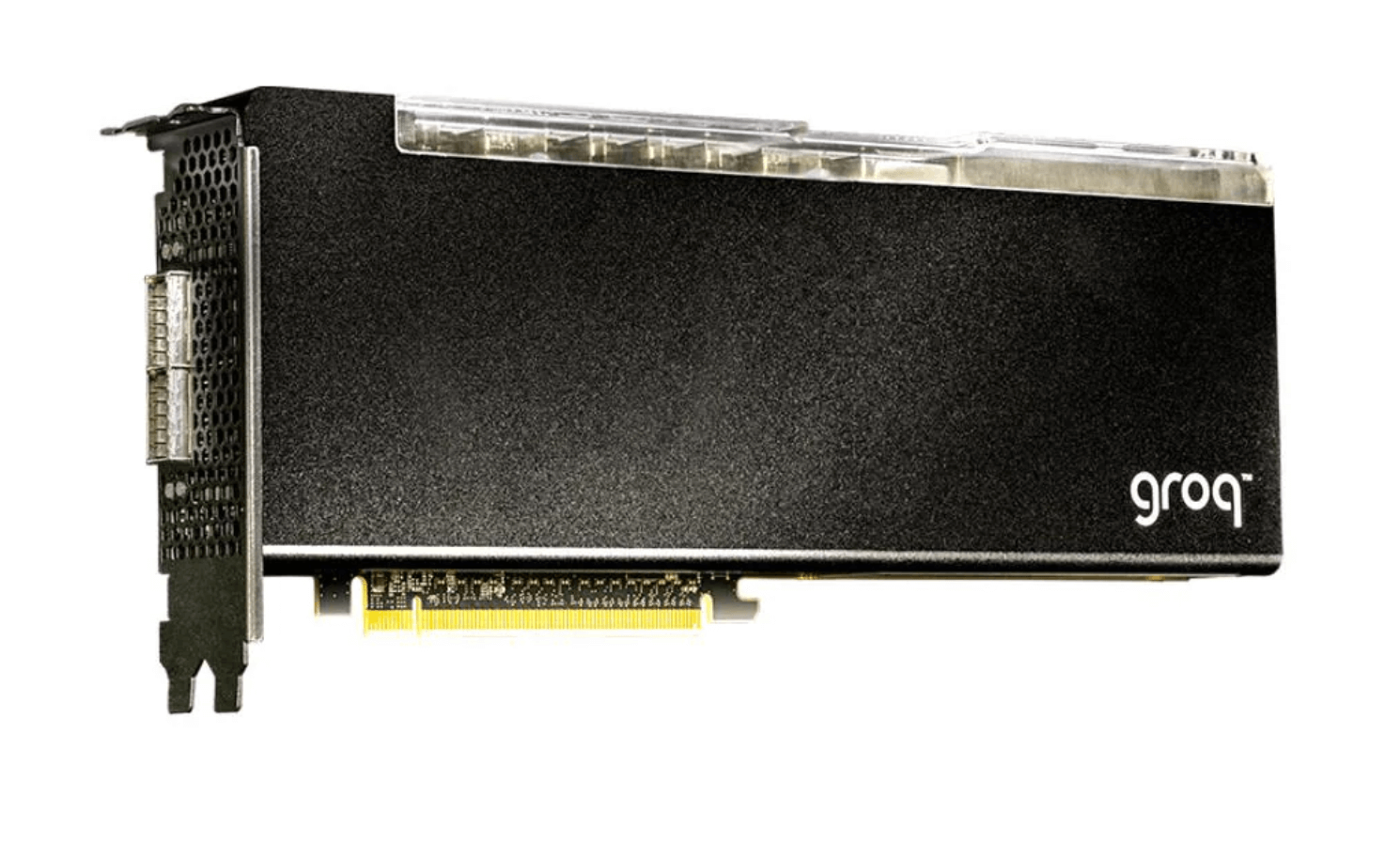

The new $20,000 Groq AI chip uses an LPU (language processing unit) architecture instead of the GPU (graphics processing unit) architecture that is prevalent in the AI industry for running LLMs. Groq’s LPU Inference Engine has set a new record in processing efficiency for LLMs, with nearly instantaneous response times.

This was recently revealed in a benchmark by ArtificialAnalysis.ai, in which Groq’s LPU outperformed eight competitors in several areas, including latency vs. throughput and total response time. Groq’s hardware hit speeds of 500 tokens per second, compared to 30-50 tokens per second for GPT-3.5.

In the throughput test, the Groq LPU scored 241 tokens per second, far ahead of its rivals and roughly twice as fast as its competitors. This opens up new opportunities for using large language models across a range of sectors. Groq’s internal benchmarks report speeds of up to 300 tokens per second, a feat that older technologies and established market players have not approached.

To put this into perspective, Groq was also tested against GPT-3.5 in a side-by-side comparison, in which Groq processed the same prompt four times faster.
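
To make these throughput figures concrete, the short Python sketch below converts tokens per second into the wait for a full response. The 500-token response length and the helper name `generation_seconds` are illustrative assumptions, not part of any benchmark; the rates are the ones quoted above, and time to first token is ignored.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady rate, ignoring time to first token."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 500

# Rates quoted in the article, in tokens per second.
RATES = {
    "Groq LPU (500 tokens/s figure)": 500,
    "Groq LPU (internal benchmark)": 300,
    "Groq LPU (throughput benchmark)": 241,
    "GPT-3.5 (upper figure)": 50,
    "GPT-3.5 (lower figure)": 30,
}

for label, rate in RATES.items():
    print(f"{label}: {generation_seconds(RESPONSE_TOKENS, rate):.1f} s")
```

Under these assumptions, the same response takes about one second at 500 tokens per second and roughly 10 to 17 seconds at 30-50 tokens per second.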

Technical Specifications

The GroqCard™ Accelerator delivers up to 750 TOPS (INT8) and 188 TFLOPS (FP16 @ 900 MHz). It also features 230 MB of SRAM per chip and an on-die memory bandwidth of up to 80 TB/s, surpassing standard CPU and GPU configurations, especially in tasks involving LLMs.

This increase in efficiency is credited to the LPU’s ability to drastically cut the computation time per word and to ease the bottlenecks caused by external memory, allowing text sequences to be generated faster.
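
A rough back-of-the-envelope sketch in Python shows why on-die memory matters here. It uses the 230 MB and 80 TB/s figures from the specifications above and assumes both apply to a single chip and that megabytes are decimal. The 7-billion-parameter FP16 model and the helper names are hypothetical, chosen only for illustration; this is not Groq's sizing method.

```python
import math

# Figures from the article.
SRAM_BYTES_PER_CHIP = 230e6   # 230 MB, assuming decimal megabytes
ON_DIE_BANDWIDTH = 80e12      # 80 TB/s, expressed in bytes per second


def chips_to_hold(weight_bytes: float) -> int:
    """Minimum number of chips whose combined SRAM fits the given weights."""
    return math.ceil(weight_bytes / SRAM_BYTES_PER_CHIP)


def read_seconds(num_bytes: float, bytes_per_second: float) -> float:
    """Lower bound on the time to stream num_bytes at a given bandwidth."""
    return num_bytes / bytes_per_second


# Hypothetical model: 7 billion parameters stored as 2-byte FP16 values.
PARAMS = 7e9
BYTES_PER_PARAM = 2
weight_bytes = PARAMS * BYTES_PER_PARAM

print("Chips needed to hold the weights in SRAM:", chips_to_hold(weight_bytes))

# Time for one chip to read its entire SRAM once, in microseconds.
sweep_us = read_seconds(SRAM_BYTES_PER_CHIP, ON_DIE_BANDWIDTH) * 1e6
print(f"One full SRAM read per chip: {sweep_us:.3f} microseconds")
```

The estimate ignores compute time and chip-to-chip communication, so it only bounds the memory-read portion of generating each token.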

Groq’s speed is best appreciated firsthand. Its near-instantaneous responses open up new possibilities for artificial intelligence and user interactions. Moreover, the efficiency and cost-effectiveness of LPUs make them a compelling alternative to the highly sought-after GPUs that currently dominate the market.

Users can try out Groq’s new hardware on open-source LLMs here.