At Nvidia’s recent GTC 2024 keynote, generative AI received not only plenty of PR hype but also a major computational boost from the company’s latest AI chips, the Blackwell GPUs. Nvidia bills them as the world’s most powerful AI hardware, saying they will supercharge AI training and reduce costs by a factor of up to 25 compared with the previous generation.

Nvidia’s CEO, Jensen Huang, views Blackwell as the catalyst for a new industrial revolution. He says the platform will power generative AI applications and support large language models with up to several trillion parameters.

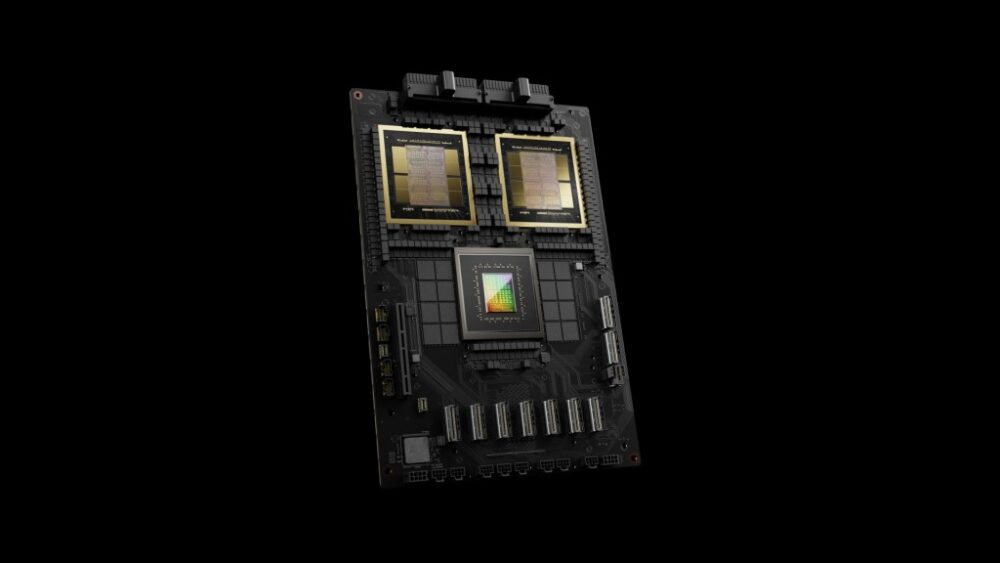

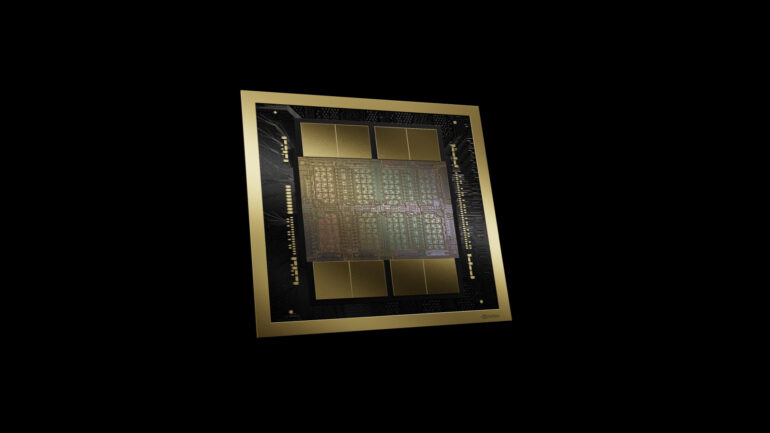

The new Blackwell GPUs pack a whopping 208 billion transistors across two dies built on TSMC’s cutting-edge 4NP manufacturing process, which enables significantly better power efficiency and computing power. The two dies are linked by a 10 TB/second chip-to-chip connection, which lets them operate as a single, unified CUDA GPU.

Blackwell also features a second-generation Transformer Engine that runs AI workloads at FP4 precision. Improved NVLink communication technology allows data transfer among as many as 576 GPUs, and a new RAS Engine (reliability, availability, and serviceability) supports AI-driven predictive maintenance and more.

Each GPU carries 192 gigabytes of HBM3e memory and delivers 10 petaFLOPS of AI compute in FP8 and 20 petaFLOPS in FP4. According to Nvidia, the new Transformer Engine, with its so-called “Micro Tensor Scaling,” doubles the computing power, the supported model size, and the bandwidth.
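To put the memory and precision figures in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes that only model weights are stored, that 192 GB means 192 × 10^9 bytes, and that each parameter takes exactly 16, 8, or 4 bits. The function names are made up for illustration, and real deployments also need room for activations, caches, and scaling metadata, so treat the numbers as upper bounds rather than Nvidia's figures.

```python
# Bits per parameter for each numeric format (weights only; an assumption
# for this sketch, ignoring any scaling factors or other metadata).
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}

HBM_GB = 192  # onboard HBM3e capacity from the article, taken as 192 * 10**9 bytes


def weight_footprint_gb(num_params: float, fmt: str) -> float:
    """Return the weight storage in gigabytes (10**9 bytes) for a model."""
    return num_params * BITS_PER_PARAM[fmt] / 8 / 1e9


def max_params_in_memory(memory_gb: float, fmt: str) -> float:
    """Return how many parameters fit in memory_gb if only weights are stored."""
    return memory_gb * 1e9 * 8 / BITS_PER_PARAM[fmt]


if __name__ == "__main__":
    for fmt in BITS_PER_PARAM:
        billions = max_params_in_memory(HBM_GB, fmt) / 1e9
        print(f"{fmt}: about {billions:.0f}B parameters fit in {HBM_GB} GB")
```

Under these assumptions, halving the precision from FP8 to FP4 doubles the number of parameters that fit in the same memory. A one-trillion-parameter model would still need roughly 500 GB for its weights in FP4, more than a single GPU holds, which is why linking many GPUs over NVLink matters for models of that size.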

Together, these hardware upgrades translate to 4x the training performance, up to 25x the power efficiency, and up to 30x the inference performance of Nvidia’s H100 GPUs, which are widely used by major AI companies, such as OpenAI, that run large-scale LLMs.

This move signals Nvidia’s proactive stance against rising competition from inference-focused chips, whose makers are trying to carve out a share of the market Nvidia currently dominates.

Blackwell GPUs are expected to arrive at major cloud providers soon. Companies such as Amazon Web Services, Google, Meta, Microsoft, and OpenAI will be among the first to deploy them.

Via: The Decoder