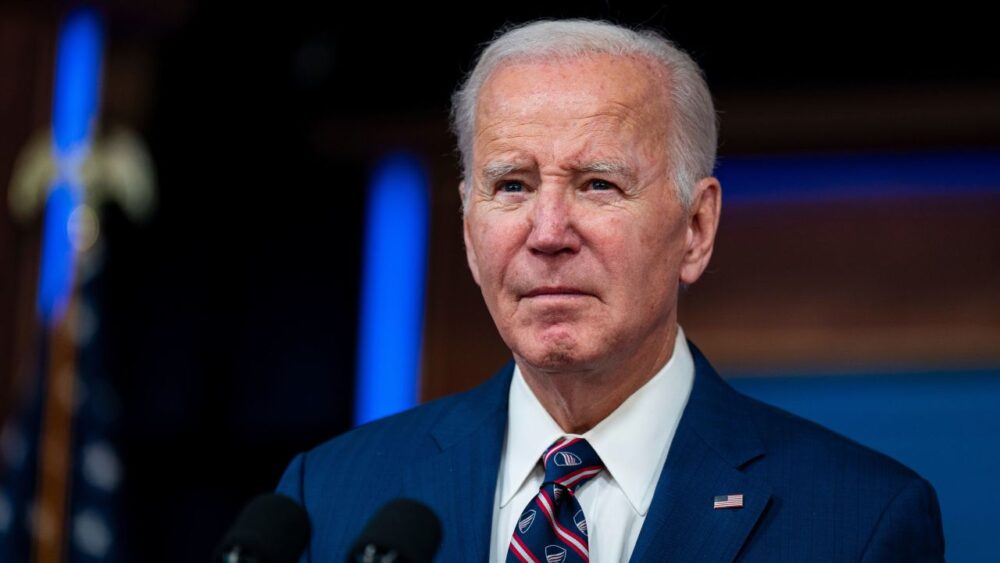

U.S. President Joe Biden has issued an executive order establishing new standards to address AI safety and security concerns around the world.

Under the order, companies developing foundational AI models must notify the federal government of their work. They must also share the results of all safety tests before making their models publicly available.

Following the popularization of highly capable Large Language Models (LLMs) such as ChatGPT and Google Bard, there have been global debates regarding the need for safeguards to mitigate the potential downsides of ceding excessive control to algorithms.

In May of this year, the Group of Seven (G7) countries met to discuss critical issues to be addressed within the framework of the Hiroshima AI Process. Yesterday, the seven member nations agreed on guiding principles and a “voluntary” code of conduct for AI developers, which is intended to support AI safety and regulation.

The United Nations (UN) took a similar approach last week, forming a new board dedicated to exploring AI governance. Meanwhile, the UK is hosting a global summit on AI governance this week, at which U.S. Vice President Kamala Harris has been invited to speak.

The Biden-Harris Administration has placed a strong emphasis on AI safety, initially seeking “voluntary commitments” from prominent AI developers such as OpenAI, Google, Microsoft, Meta, and Amazon. These voluntary agreements have laid the groundwork for the executive order being formally unveiled today.

The order also requires developers working on the “most powerful AI systems” to disclose their safety test results and associated data to the U.S. government.

The order notes that: “As AI’s capabilities grow, so do its implications for Americans’ safety and security. The order is intended to protect Americans from the potential risks of AI systems. These measures will ensure AI systems are safe, secure, and trustworthy before companies make them public.”

The executive order also discusses plans to develop tools and systems that would support AI safety and make AI more trustworthy. The National Institute of Standards and Technology (NIST) will be responsible for forming standards “for extensive red-team testing” ahead of release.