Update: Google DeepMind’s VP of Research Oriol Vinyals has since clarified in a social media post that the Gemini demonstration video was meant to show what interactions with the AI model “could” look like. “We made it to inspire developers,” he said.

Mixed reviews are common for new product launches, but Google’s reputation and customer trust may be on the line with the recent launch of its Gemini AI model. That is because, according to Bloomberg, Google faked Gemini’s best demonstration video to exaggerate the model’s capabilities.

The demo video in question is called “Hands-on with Gemini: Interacting with multimodal AI”, and it gained more than 1 million views in a day. The impressive footage shows the AI model seamlessly taking input from text and media alike while remaining flexible and responsive.

But as it turns out, the video was faked in a way that misrepresents Gemini’s skills.

Bloomberg was the first to point out a discrepancy in the demo video. It stems from Google’s own description of how the video was made: “We created the demo by capturing footage in order to test Gemini’s capabilities on a wide range of challenges. Then we prompted Gemini using still image frames from the footage, and prompting via text.”

This means that while Gemini appeared highly impressive in the video, it was in fact working with highly tailored text prompts that made the tasks simpler for the AI. The prompts shown in the video were carefully selected and shortened, which misrepresents what the interaction is actually like.

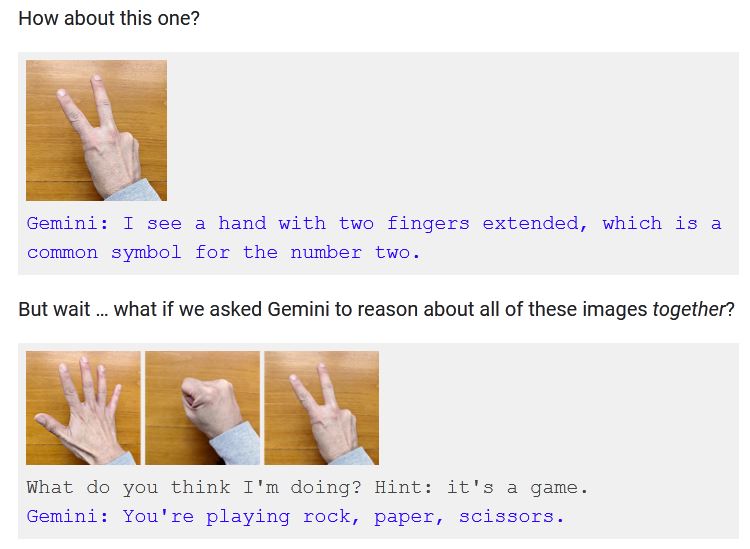

For instance, in Google’s demonstration video, a silent user makes different hand gestures for the game of rock, paper, scissors, and Gemini quickly guesses what the user is doing. In reality, this is not how Gemini arrived at its guess behind the scenes.

Based on Google’s own documentation, Gemini is not able to reason from a user’s gestures alone. It needs to be shown all of the hand gestures at the same time before it can guess what is happening. But it doesn’t end there: Gemini also needed a hint before it was able to guess. The actual prompt contained all three gestures at once along with a clear-cut hint about what was going on.

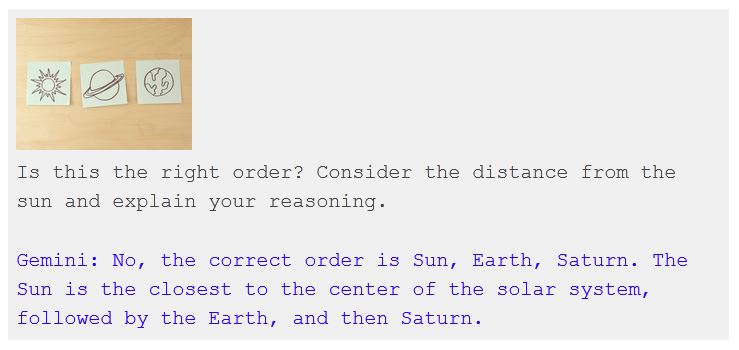

Another example is the sticky notes demonstration, where Gemini was shown a sketch of the sun, Saturn, and Earth, and asked, “Is this the right order?” In the video, Gemini promptly provided the right order.

The actual prompt used for the demo was, in fact, a lot more elaborate and easier for the model to work with.

Whether Gemini got the answer right or wrong is irrelevant; the concerning point is how much help it needed to do so. The AI model’s capabilities are clearly exaggerated in the demonstration video, but even that would have been acceptable had Google clarified that “This is a stylized representation of interactions our researchers tested.”

The only way to settle the matter is to wait until Gemini becomes publicly available, so that everyone can test it and verify Google’s demos.