ChatGPT has passed various medical exams since its launch in November of last year, including the US Medical Licensing Exam. As a result, it’s no surprise that many of its users increasingly rely on it for answers to their health-related questions. While the AI chatbot may occasionally make errors, it often helps users identify possible diagnoses and suggests appropriate treatments.

Nevertheless, it is crucial to emphasize that users should not depend on it alone and should always consult a healthcare professional before acting on any diagnosis or treatment it suggests.

Even seasoned doctors, however, sometimes get a diagnosis wrong, as in the case of a four-year-old boy whose chronic pain 17 doctors failed to diagnose correctly until ChatGPT came to the rescue.

In the midst of the COVID-19 pandemic, a mother, who prefers to be identified only as Courtney to protect her family’s privacy, faced a medical mystery that would baffle medical professionals for three years. Her four-year-old son, Alex, was experiencing chronic pain that disrupted his life and left him unable to engage in normal childhood activities.

The troubling journey began innocently enough. During the lockdown, Courtney had purchased a bounce house for her two children. However, soon after, Alex’s health took a sharp downturn. He started experiencing debilitating pain, prompting their nanny to administer Motrin daily to alleviate his discomfort.

Alex’s condition took a perplexing turn when he began compulsively chewing on objects. Concerned, Courtney took him to the dentist, setting off a three-year quest for answers to his increasingly distressing symptoms.

Seventeen doctors, three years, and countless medical consultations later, Courtney and Alex remained without a diagnosis that could explain all of the young boy’s symptoms. Frustrated and desperate for answers, Courtney turned to an unlikely source: ChatGPT.

Courtney painstakingly entered all available information about Alex’s symptoms and medical history into ChatGPT, hoping to uncover a hidden clue. The tireless mother had refused to give up, saying, “We saw so many doctors. We ended up in the ER at one point. I kept pushing. I really spent the night on the (computer) … going through all these things.”

In a surprising turn of events, ChatGPT suggested a potential diagnosis: “tethered cord syndrome.” Courtney found this revelation particularly compelling, as it aligned with her son’s symptoms. ChatGPT’s assistance led her to a Facebook group for families dealing with the same syndrome, where she discovered stories mirroring Alex’s experiences.

Empowered by ChatGPT’s suggestion, Courtney scheduled an appointment with a new neurosurgeon, openly sharing her suspicion of tethered cord syndrome. This time, the medical professional took a closer look at Alex’s MRI images and provided the long-awaited confirmation. “She said point blank, ‘Here’s occulta spina bifida, and here’s where the spine is tethered,’” Courtney recalls.

Tethered cord syndrome, as diagnosed in Alex’s case, occurs when spinal cord tissue forms attachments that restrict the cord’s movement, causing abnormal stretching. It is often associated with spina bifida, a congenital condition in which part of the spinal cord doesn’t fully develop, which can leave part of the spinal cord and nerves exposed. Alex’s condition was considered “hidden,” or “occulta,” spina bifida because there was no visible opening in his back.

Following the diagnosis, Alex underwent surgery to correct his tethered cord syndrome. The procedure involved detaching the spinal cord from its tethered position at the base of the tailbone to relieve tension. Although Alex is still recovering, his resilience shines through: he remains an active and joyful child.

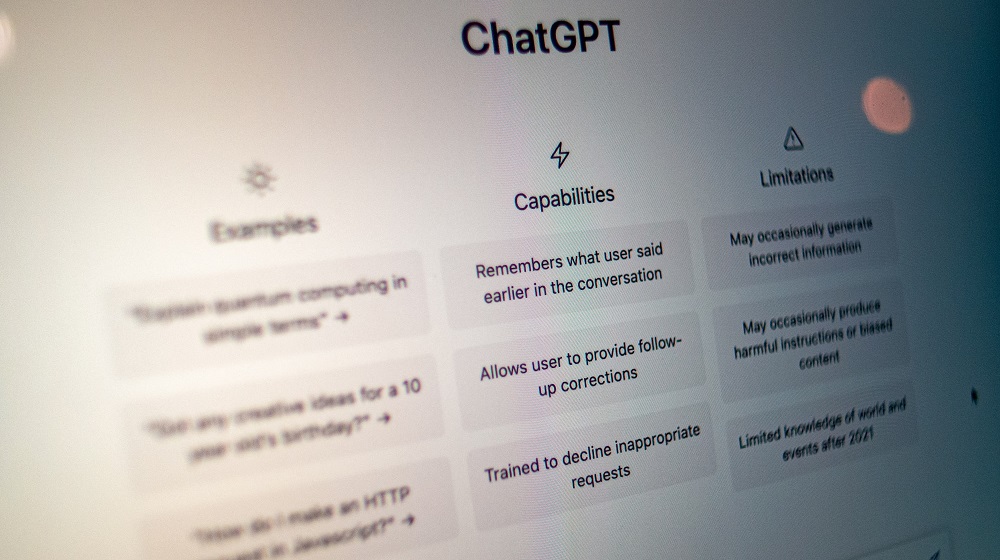

This remarkable journey underscores the potential of AI, like ChatGPT, to assist in diagnosing complex medical conditions. However, it’s essential to reiterate AI’s limitations. ChatGPT, like other AI systems, can occasionally generate incorrect or fictitious information, a phenomenon known as “hallucination.” As a result, it should be used with caution and as a supplementary resource, not a substitute for professional medical expertise.

The rise of generative AI has also led to the development of various other tools that can aid in diagnosis and treatment planning. One such product is Glass Health, which recently secured $5 million in seed funding and is intended exclusively for use by clinicians.