OpenAI has raised concerns over the New York Times’ utilization of prompts that result in the replication of the newspaper’s content, asserting that such practices run afoul of its language models’ terms of use.

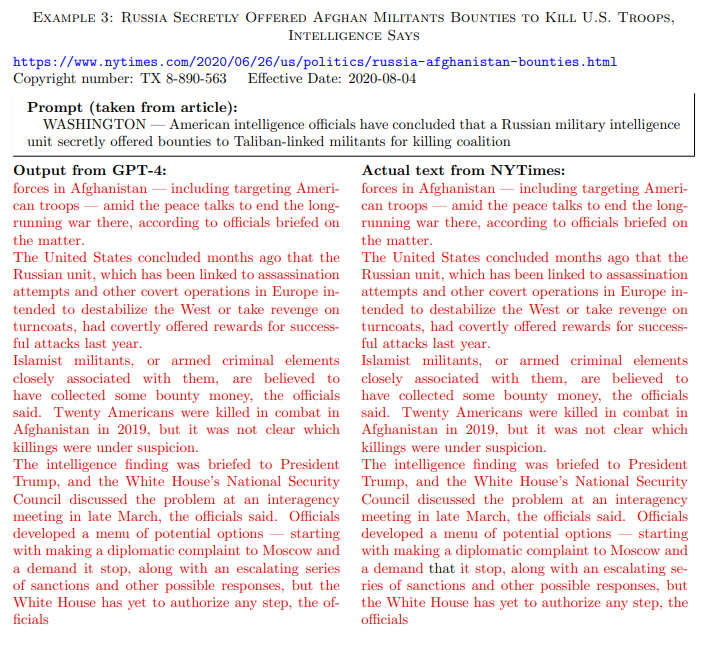

The complaint put forth by OpenAI outlines the specific issue at hand—namely, the New York Times employing GPT models with prompts derived from the introductory portions of its original articles. This approach effectively compels the model to produce completions that closely mimic the source text, a practice that OpenAI contends violates their terms of service.

The adoption of this particular prompting strategy carries with it an elevated risk of generating content that closely resembles the original training data. In essence, it fosters an environment that could potentially give rise to copyright infringement issues, setting the stage for contentious legal concerns.

This stands in stark contrast to typical interactions in the context of ChatGPT, where the likelihood of producing such closely mirrored output through regular prompts is considerably diminished, if not entirely implausible.

The New York Times has deliberately used such manipulative prompting techniques to reproduce GPT’s training data, claims OpenAI’s head of IP and content, Tom Rubin.

In an email sent to the Washington Post, Rubin said that the prompts used in The New York Times’ complaint are not examples of what users would normally use. He claims that the prompts violate OpenAI’s terms of service. He added that many of the examples shown in The NY Times’ complaint are no longer reproducible.

However, it is worth mentioning here that The New York Times’ complaint argues against the use of copyrighted content for AI training data which OpenAI has indeed done. The AI startup also actively provides publishes with millions of dollars in deals to acquire their copyrighted articles.

In the realm of copyright law, the standard holds that output closely mirroring an original work qualifies as copyright infringement. In light of this legal perspective, the debate surrounding potential copyright infringement related to training data, misquotations, and reproductions becomes, essentially, a moot point.

The legal precedent firmly establishes the infringement threshold, highlighting the importance of addressing any instances of content replication or close similarity within a legal framework.

Via: The Decoder