American chip-making giant Nvidia has worked with the University of Toronto and MIT to develop a new AI tool called Align Your Gaussians (AYG) that can create 3D animations from simple text prompts.

Align Your Gaussians (AYG) represents 3D shapes as a collection of 3D Gaussian functions and uses deformation fields to model how those Gaussians move over time, which is what produces the animation. These “3D Gaussians” have recently gained attention as a potential alternative to the widely used NeRFs in 3D modeling.
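As a rough illustration of that idea, the sketch below stores a shape as a list of Gaussians and moves their centers with a deformation field. Everything in it is an assumption made for illustration: the `Gaussian3D` class, the `sway_field` function, and the sinusoidal motion are hypothetical, and AYG learns its deformation field during training rather than using a hand-written formula.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian3D:
    """One 3D Gaussian: a center, a per-axis scale, and an opacity (hypothetical layout)."""
    center: tuple
    scale: tuple
    opacity: float


def sway_field(point, t):
    """Hypothetical deformation field: offset for `point` at time `t` in [0, 1].

    Points higher up (larger y) sway further along x; the offset is zero at t = 0.
    """
    x, y, z = point
    dx = 0.1 * y * math.sin(2.0 * math.pi * t)
    return (dx, 0.0, 0.0)


def deform(gaussians, field, t):
    """Return new Gaussians whose centers are displaced by the field at time t."""
    moved = []
    for g in gaussians:
        offset = field(g.center, t)
        new_center = tuple(c + o for c, o in zip(g.center, offset))
        moved.append(Gaussian3D(new_center, g.scale, g.opacity))
    return moved


# A tiny "shape": three Gaussians stacked along the y axis.
shape = [Gaussian3D((0.0, float(y), 0.0), (0.2, 0.2, 0.2), 0.9) for y in range(3)]

# Sample the animation at five time steps; each frame is a deformed copy of the shape.
frames = [deform(shape, sway_field, step / 4) for step in range(5)]
```

The static shape is never modified here. Each frame is derived from it by evaluating the field at a given time, so the shape and the motion are kept as separate pieces.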

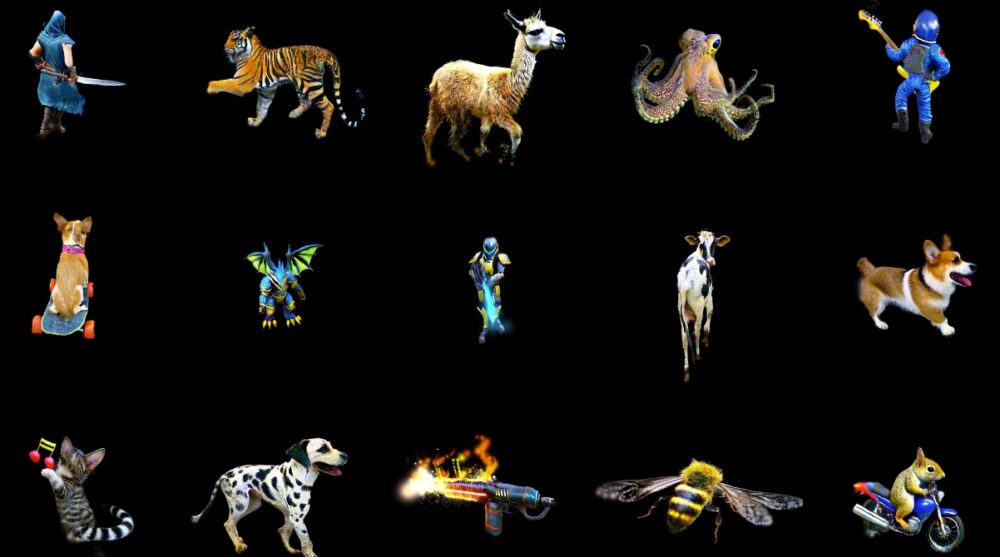

The video below shows what Nvidia’s Align Your Gaussians (AYG) is capable of.

Nvidia’s AYG tool combines several AI models. Stable Diffusion, a text-to-image model, keeps the imagery in each frame realistic. An unnamed text-to-video model, trained on a large dataset of videos, works behind the scenes to produce smooth motion over time.

Additionally, a multi-view 3D model is applied so that the generated objects keep their geometric accuracy when seen from different perspectives.

Training against these models together allows AYG to fine-tune both the representation of 3D shapes and the deformation fields. As a result, it can produce animations that are dynamic, display realistic textures, and maintain geometric integrity, all from simple text inputs such as “a horse galloping across a meadow”.
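One way to picture several models steering the same result is as a weighted sum of guidance signals. The sketch below is a toy under stated assumptions, not AYG's actual training procedure: the "critics" are hypothetical stand-ins that simply pull a parameter vector toward a fixed target, and the weights, learning rate, and function names are invented for illustration.

```python
def make_critic(target):
    """Hypothetical stand-in for a pretrained model: its gradient pulls params toward `target`."""
    def gradient(params):
        return [p - t for p, t in zip(params, target)]
    return gradient


def combined_gradient(params, critics, weights):
    """Weighted sum of the gradients supplied by each critic."""
    total = [0.0] * len(params)
    for critic, w in zip(critics, weights):
        for i, g in enumerate(critic(params)):
            total[i] += w * g
    return total


def optimize(params, critics, weights, lr=0.1, steps=200):
    """Plain gradient descent on the combined signal."""
    for _ in range(steps):
        grad = combined_gradient(params, critics, weights)
        params = [p - lr * g for p, g in zip(params, grad)]
    return params


# Toy stand-ins for per-frame image quality, motion smoothness, and multi-view consistency.
image_critic = make_critic([1.0, 0.0])
video_critic = make_critic([0.0, 1.0])
multiview_critic = make_critic([1.0, 1.0])

weights = [1.0, 1.0, 2.0]
result = optimize([0.0, 0.0], [image_critic, video_critic, multiview_critic], weights)
```

In this toy setup the parameters settle near the weighted average of the three targets, a compromise between the signals rather than a win for any single one.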

The researchers behind AYG also highlight its ability to adapt to new concepts, ones that it wasn’t exposed to during its training phase.

AYG can also extend animations and chain them together over durations longer than current-generation text-to-video models typically manage. An example video below shows dogs transitioning seamlessly from walking to barking animations.

The researchers behind AYG believe that this feature could eventually be used to create true 4D scenes and simulations. Such scenes could in turn supply synthetic data, which is often used when real training data is limited, for example in the development of autonomous driving technologies.

Another distinguishing feature of AYG is its ability to combine multiple animated objects within a single scene. The researchers showcase this capability with a scene in which several animated characters gather around a campfire.